Threadripper 1920X versus Ryzen 5900X on Linux and Windows

First generation Threadripper opened the workstation and HEDT markets to a wider audience. Some time after its release I built a 1920X-based PC for work on Linux and some gaming on Windows. With the release of Zen 3 I got the opportunity to compare the 12-core Threadripper 1920X to the consumer 12-core Ryzen 5900X in productivity and gaming workloads on Linux and Windows.

Test systems

| | 1920X | 5900X |
|---|---|---|
| RAM | 4 x 8 GB G.Skill Ripjaws V (3200MHz, CL15, F4-3200C15D) | 4 x 8 GB Patriot Viper Steel (4400MHz, CL19, PVS416G440C9K) running at 3800 CL16 |
| Motherboard | ASRock X399M Taichi (micro-ATX) | Gigabyte B550 Aorus Master |
| GPU | Sapphire Vega 64 + Alphacool Eiswolf 240 GPX Pro | Gainward RTX 3070 |
| PSU | Chieftec PROTON BDF-1000C | Enermax Revolution 87+ 850W (80+ Gold) |
| CPU cooler | Alphacool Eisbaer 240 (water AiO) | Alpenfohn Brocken 3 |

Both systems were installed in open-bench cases (a Streacom BC1 for the Threadripper and a custom V-Slot frame for the Ryzen platform). Tests were done on Windows 10 (the latest version at the time of writing), Ubuntu 20.10 and a daily build of Ubuntu 21.04 (more on that later). For a more accurate comparison, some Linux tests on the Ryzen 5900X were also done with the Ripjaws RAM kit at 3200 CL15.

Windows benchmarks

Each of these CPUs and GPUs has already been reviewed. I wanted to put them head to head in a set of benchmarks to get a rough idea of how much improvement the 5900X offers over the 1920X (and how good old Vega performs versus the latest Nvidia GPU). Here is a set of synthetic CPU benchmark results for both systems:

| 1920X | 5900X | Performance delta 5900X vs 1920X |

|

|---|---|---|---|

| V-Ray | 10381 | 16888 | 162,7% |

| Corona (seconds) | 93 | 57 | 163,2% |

| Cinebench R15 Single | 162 | 271 | 167,3% |

| Cinebench R15 | 2392 | 3608 | 150,8% |

| Cinebench R20 Single | 397 | 616 | 155,2% |

| Cinebench R20 | 5245 | 8222 | 156,8% |

| Cinebench R23 Single | 1044 | 1667 | 159,7% |

| Cinebench R23 | 13133 | 21788 | 165,9% |

| Novabench CPU Score | 2155 | 3579 | 166,1% |

| PassMark CPU Score | 24661,8 | 41590 | 168,6% |

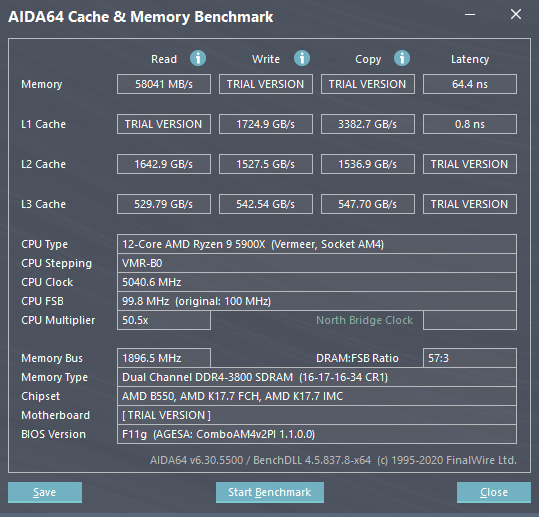

| Aida64 memory latency (ns) | 91,1 | 64,6 | 141,0% |

| Aida64 memory read (MB/s) | 75524 | 58139 | 77,0% |

| Geekbench 5 Single | 1035 | 1748 | 168,9% |

| Geekbench 5 | 10338 | 14859 | 143,7% |

| Time Spy CPU Score | 9854 | 14263 | 144,7% |

| Time Spy Extreme CPU Score | 4865 | 7496 | 154,1% |

| Night Raid CPU Score | 10093 | 16092 | 159,4% |

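Quite solid wins for the Ryzen 9 5900X. For the Corona and Aida64 latency rows, where lower is better, the delta is the 1920X result divided by the 5900X result, so values above 100% still favour the 5900X. Memory read bandwidth is lower because the Ryzen platform uses a dual-channel RAM configuration, while Threadripper uses quad-channel. Next we have some system benchmarks: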

| Benchmark | 1920X + Vega 64 | 5900X + RTX 3070 | Performance delta |
|---|---|---|---|
| PCMark 10 | 5837 | 8021 | 137,4% |
| PassMark | 5815,6 | 9200 | 158,2% |
| Novabench | 3547 | 5856 | 165,1% |
| Basemark | 1481 | 1566 | 105,7% |

Basemark is a browser-based benchmark that surfaced when some results for the latest hardware leaked to the public. The scores differ only a little, and even that 105% delta is questionable, so I'm not sure Basemark can be used to put leaked scores into perspective against other devices. Both systems were tested in MS Edge, which uses the Chromium engine. GFXBench didn't want to run on the Ryzen system due to an unspecified network error; that benchmark was also used in some recent leaks. It's quite lightweight, easy work even for a Vega 64 or some iGPUs.

To finish the synthetic tests, here are the GPU/gaming benchmarks:

| Benchmark | 1920X + Vega 64 | 5900X + RTX 3070 | Performance delta |
|---|---|---|---|
| Time Spy | 7572 | 13708 | 181,0% |
| Time Spy Extreme | 3634 | 6804 | 187,2% |
| Night Raid | 39399 | 64704 | 164,2% |
| Superposition 1080p Medium | 14120 | 24542 | 173,8% |
| Superposition 4K Optimized | 6026 | 11018 | 182,8% |
| DX12 draw calls per second | 27263082 | 58248594 | 213,7% |
| DX11 MT draw calls per second | 1416229 | 4643475 | 327,9% |

In summary, the R9 5900X is around 60% faster than the 1920X. The Threadripper is three years older than the Zen 3 part, so AMD managed roughly 20% per year on the Zen architecture (60% spread evenly over three years). That's way more than what we got from Intel in the past years.
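The "around 60%" summary and the per-year figure can be checked with a little arithmetic. The sketch below is one possible way to summarize the synthetic CPU table, not the method used for this article: it takes the geometric mean of the per-benchmark ratios (the usual way to average speed-ups), inverts the Corona time where lower is better, and then compounds the result over three years. Leaving out the two Aida64 memory rows is an assumption of the sketch, since they mostly reflect the memory setup.

```python
from math import prod

# Rows from the synthetic CPU table: (benchmark, 1920X, 5900X, higher_is_better).
# Assumption of this sketch: the two Aida64 memory rows are left out because they
# mostly measure the memory configuration rather than the cores.
RESULTS = [
    ("V-Ray", 10381, 16888, True),
    ("Corona (s)", 93, 57, False),
    ("Cinebench R15 Single", 162, 271, True),
    ("Cinebench R15", 2392, 3608, True),
    ("Cinebench R20 Single", 397, 616, True),
    ("Cinebench R20", 5245, 8222, True),
    ("Cinebench R23 Single", 1044, 1667, True),
    ("Cinebench R23", 13133, 21788, True),
    ("Novabench CPU Score", 2155, 3579, True),
    ("PassMark CPU Score", 24661.8, 41590, True),
    ("Geekbench 5 Single", 1035, 1748, True),
    ("Geekbench 5", 10338, 14859, True),
    ("Time Spy CPU Score", 9854, 14263, True),
    ("Time Spy Extreme CPU Score", 4865, 7496, True),
    ("Night Raid CPU Score", 10093, 16092, True),
]


def delta(old, new, higher_is_better=True):
    """Speed-up of `new` over `old` as a ratio; 1.6 corresponds to 160%."""
    return new / old if higher_is_better else old / new


def geometric_mean(values):
    """Geometric mean, the usual way to average speed-up ratios."""
    return prod(values) ** (1 / len(values))


def annualized(total_ratio, years):
    """Per-year ratio that, compounded over `years`, gives `total_ratio`."""
    return total_ratio ** (1 / years)


ratios = [delta(old, new, hib) for _, old, new, hib in RESULTS]
overall = geometric_mean(ratios)
print(f"geometric mean delta: {overall:.1%}")
print(f"compounded per year (3 years): {annualized(overall, 3) - 1:.1%}")
print(f"linear per year (3 years): {(overall - 1) / 3:.1%}")
```

Compounding gives a somewhat lower yearly figure than simply dividing by three: a 60% total gain over three years works out to about 17% per year, because each year builds on the previous one.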

The RTX 3070 is around 70-80% faster than the Vega 64 in these tests. The TechPowerUp database puts the gap at 64%, and all of that is before counting hardware-accelerated ray tracing, DLSS, VRS and other features the older GPU lacks.

I don't play many games, so I'll only cover the ones I focus on.

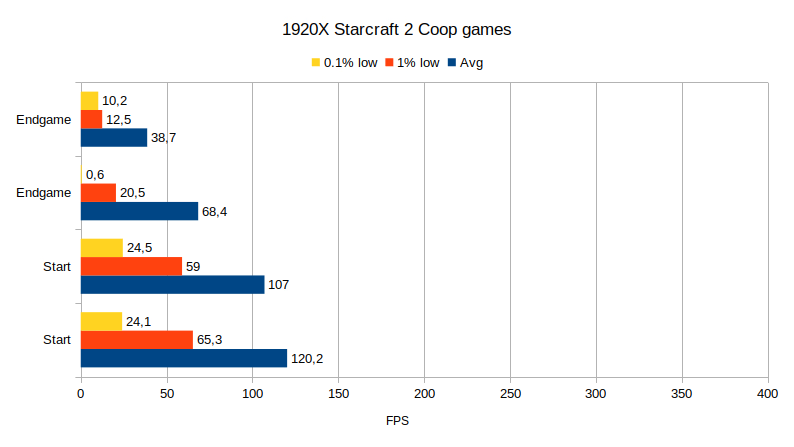

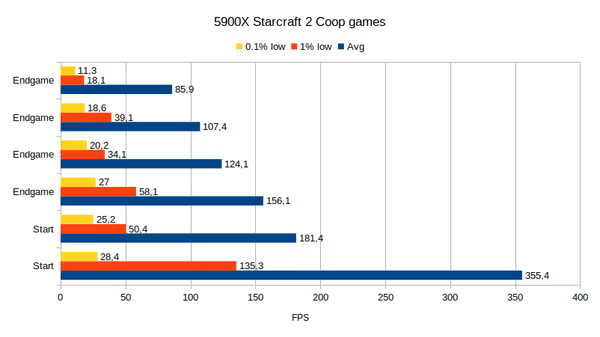

StarCraft 2

StarCraft 2 is a really dated DX9 game that needs a high-end CPU to perform well, which is very important for competitive players. Everyone else, playing Co-op, can suffer stutter down to nearly 0 FPS when large numbers of units clash. If you want examples of bad frametimes, this is the game to get data from.

I use MSI Afterburner to record FPS data from the start of Co-op games and from end-game combat moments where large armies clash. The results differ from game to game, but it's clear that the 5900X offers around 60-100% better performance.

The 1% low and 0.1% low FPS figures don't give the full picture here. The frametimes (recorded with CapFrameX) show some spikes at the start of a game but far more unstable frametimes in the end-game. The more the frametimes vary, the less fluid the experience feels, especially with sudden big spikes.

The game was running on default high settings without any FPS limiter or VSync.
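CapFrameX computes these metrics itself; the sketch below only illustrates how average FPS, "low" FPS and frametime variability relate to each other. Definitions of 1% low vary between tools (some use the 99th-percentile frametime, others the average of the slowest 1% of frames), and this sketch assumes the latter. The two captures and the 50 ms spike threshold are made-up illustration values, not data from these StarCraft 2 runs.

```python
import statistics


def average_fps(frametimes_ms):
    """Average FPS over the whole capture (total frames / total time)."""
    return 1000 * len(frametimes_ms) / sum(frametimes_ms)


def low_fps(frametimes_ms, fraction):
    """FPS from the average of the slowest `fraction` of frames (0.01 -> "1% low")."""
    slowest = sorted(frametimes_ms, reverse=True)
    count = max(1, int(len(slowest) * fraction))
    return 1000 * count / sum(slowest[:count])


def spike_count(frametimes_ms, threshold_ms):
    """Number of frame-to-frame frametime increases larger than threshold_ms."""
    return sum(1 for prev, cur in zip(frametimes_ms, frametimes_ms[1:])
               if cur - prev > threshold_ms)


# Made-up captures, both averaging 100 FPS:
smooth = [10.0] * 1000                  # every frame takes 10 ms
spiky = ([9.0] * 99 + [109.0]) * 10     # a 109 ms hitch every 100 frames

for name, capture in (("smooth", smooth), ("spiky", spiky)):
    print(name,
          round(average_fps(capture), 1),
          round(low_fps(capture, 0.01), 1),
          round(statistics.pstdev(capture), 2),
          spike_count(capture, threshold_ms=50))
```

Both captures have the same average FPS, yet the second one would feel much worse. In this toy case the 1% low still catches the hitches; when hitches make up well under 1% of frames they get averaged together with ordinary frames and diluted, which is one reason a frametime plot can show more than the summary numbers.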

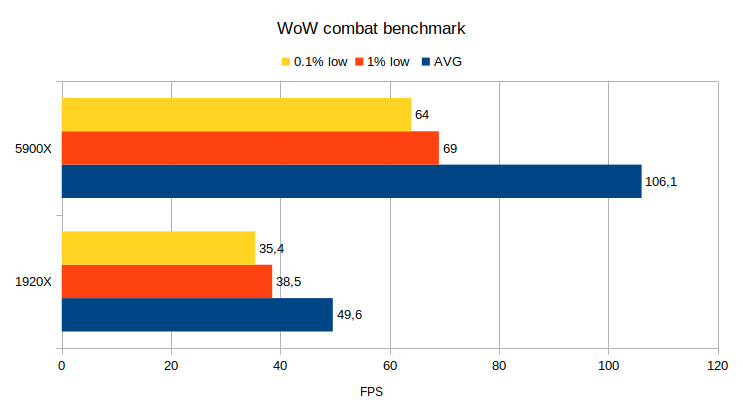

World of Warcraft

WoW is quite hard to benchmark because, depending on the scenario, you stress a different part of the system. The most CPU-bound element of the game is mass-actor combat: raids, battlegrounds and sometimes multiple mobs casting simultaneously. I'm planning a separate WoW article for this system, but here is a quick comparison of the two CPUs (mode 7, 3440x1440).

Performance pretty much doubles in this edge-case scenario. To get consistent and comparable data for combat performance I use a large mob pull in TBC Karazhan, which recreates the worst case of actual raids quite well through the sheer number of mobs and the world-state complexity they create. The load is not about assets, unless you are running on an iGPU.

WoW uses a few cores to offload draw calls and similar work, but world state and other tasks are still handled on the main thread, so single-core performance (along with latency and memory timings) is extremely important for any large-scale end-game content. In a dungeon or an average medium-to-small raid group the performance will be better. In terms of core scaling you get nearly maximum performance on 4 cores. 2 cores are noticeably slower, while 6 cores show barely any scaling, although that applies only to the game running without any other apps and addons. With the i5-9400F configured as 4C/4T, the all-core load is already close to 100%. 6-8 core Zen 3 parts will be really good for this game, just like similar parts from Intel.
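One simplified way to see why extra cores stop helping once the main thread is the bottleneck is Amdahl's law: if only a fraction P of the per-frame CPU work can be spread across cores, the rest runs serially and caps the speed-up at 1 / (1 - P). The value P = 0.6 below is a made-up illustration, not something measured in WoW, and the model ignores memory latency and the game's own thread layout.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speed-up over one core when only parallel_fraction of the work scales."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical: assume 60% of a frame's CPU work can use extra cores.
P = 0.6
for n in (1, 2, 4, 6, 8):
    gain = amdahl_speedup(P, n)
    print(f"{n} cores: {gain:.2f}x (ceiling {1 / (1 - P):.2f}x)")
```

Going from 2 to 4 cores still helps noticeably under this assumption, while 4 to 6 cores adds only about 10%, which is the same diminishing-returns shape described above. A larger serial share (a busier main thread) flattens the curve further.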

Temperature effect

Ryzen performance depends on the CPU temperature: with thermal headroom the CPU clocks a bit higher and gives slightly better results. The GPU can also lower its clocks based on its current temperature. As the system is on an open bench there are no airflow restrictions, but there is also no case airflow, which may or may not be optimal for some components.

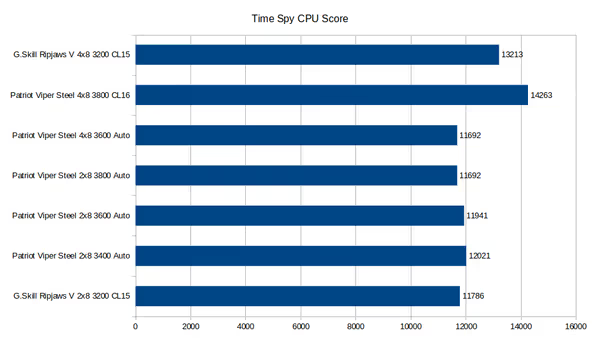

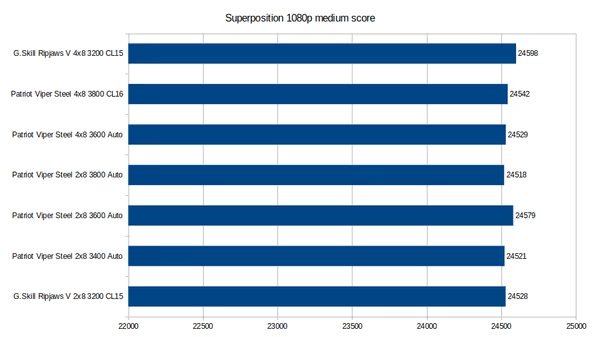

Using the winter air, I tested the Ryzen system at home at room temperature and on my balcony with an ambient temperature close to 0 °C. The Time Spy score increased by 0,6% (possibly within the margin of error), and Superposition 1080p Medium performed 2,3% better in cold air. Cinebench improved by up to 6% in R23 single core (from 1572 to 1667, with the CPU set to Auto OC in Ryzen Master).

The Unigine scores (hot and cold) show that the Gainward RTX 3070 reached up to 2010 MHz in cold air and 1965 MHz in the warmer home air.

The CPU score differences are likely due to insufficient mounting pressure on the cooler: it later turned out that some mounting screws weren't really tight, and the heatsink wasn't much warmer when the CPU was under full load. Alternatively, the cooler is just not that good, even though the Alpenfohn Brocken 3 is massive and has two fans.

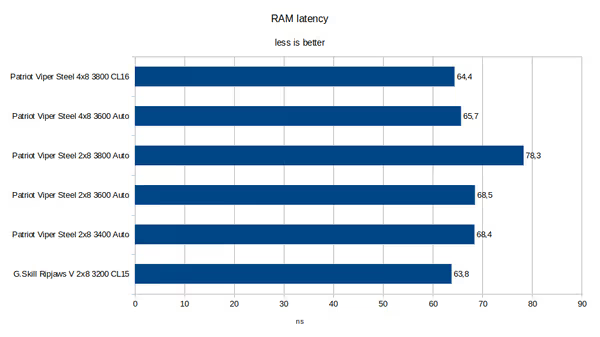

RAM benchmarks

The Patriot Viper Steel with its XMP profile at 4400MHz CL19 isn't optimal for Ryzen. This high bin of Samsung B-die is pretty much intended for RAM overclocking. Even though the 2x8 GB kit is on the motherboard's QVL, the system did not boot with that XMP profile. Next, auto timings were tested at various frequencies. The final settings use the timings given by the Ryzen DRAM Calculator.

The auto timings were really loose (like CL26 at 3600), although the Superposition score doesn't change as much as the more CPU-dependent Time Spy CPU score. Four sticks of single-rank RAM are better than two. If you use dual-rank DIMMs (like some 16 GB or bigger modules), two sticks should be the optimal choice.
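A quick way to compare the timing sets mentioned above is first-word CAS latency in nanoseconds. DDR memory transfers twice per clock, so at a data rate of R MT/s (the "MHz" figures used in this article) one memory clock lasts 2000 / R ns. This is only one of many timings and does not predict the Aida64 latency figure, which includes the whole memory path; the sketch is an illustration of why the auto timings stand out.

```python
def cas_latency_ns(data_rate_mts, cas_cycles):
    """First-word CAS latency in nanoseconds.

    DDR transfers twice per clock, so the memory clock is data_rate / 2 MHz and
    one clock cycle lasts 2000 / data_rate ns.
    """
    return cas_cycles * 2000 / data_rate_mts


# Timing sets mentioned in the article: (label, data rate in MT/s, CL).
CONFIGS = [
    ("Ripjaws V 3200 CL15", 3200, 15),
    ("Viper Steel 3800 CL16", 3800, 16),
    ("Viper Steel XMP 4400 CL19", 4400, 19),
    ("Auto timings 3600 CL26", 3600, 26),
]

for label, rate, cl in CONFIGS:
    print(f"{label}: {cas_latency_ns(rate, cl):.2f} ns")
```

By this measure the 3800 CL16 setting is slightly quicker than the 4400 CL19 XMP profile and clearly quicker than 3200 CL15, while the auto timings at 3600 CL26 are far behind all of them.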

Linux benchmarks

For Linux I used the Phoronix Test Suite. There was no problem running Linux on the Threadripper platform, while the Ryzen system on the Gigabyte B550 Aorus Master had some problems. First of all, the 2.5Gbit Ethernet did not work on any tested Ubuntu version (20.04, 20.10 and a daily build of 21.04). On 20.04 LTS and 21.04 WiFi did work, while 20.10 and a recent Manjaro Live USB did not fully boot, stopping at a semi-working terminal without the desktop running. This likely isn't related only to the RTX 3070, as the problem didn't occur at the end of the boot process. Sadly I couldn't investigate more at the time.

The benchmarks I picked focus on latency and efficiency in specific workloads, to get a picture of how Zen 3 differs from Zen 2, Threadripper and Intel designs. You can check the raw results on OpenBenchmarking.org. Sadly I couldn't run the NAS Parallel Benchmarks, as they didn't compile on Ubuntu 21.04 with its newer compiler. Those benchmarks showed big differences between the Ryzen 3500X and the i5-9400F before (some of them rely on inter-core communication latency and so on).

So let's start with a quick comparison with both systems running the same 3200 MHz CL15 RAM, and then with the 5900X running 3800 CL16 RAM.

Threadripper wins only in the STREAM memory bandwidth benchmarks thanks to its quad-channel memory, but 3800MHz RAM cuts that loss by a lot. Now let's look at some results in detail.
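The STREAM gap follows from the theoretical peak bandwidth of each memory setup: each DDR4 channel is 64 bits (8 bytes) wide, so peak bandwidth is channels × 8 bytes × data rate. The sketch below computes that ceiling for the configurations in this article; it is an upper bound, and real benchmarks reach only part of it (the Aida64 read results in the Windows table, 75524 and 58139 MB/s, both stay below their ceilings).

```python
BYTES_PER_TRANSFER = 8  # each DDR4 channel is 64 bits wide


def peak_bandwidth_mb_s(channels, data_rate_mts):
    """Theoretical peak DRAM bandwidth in MB/s (1 MB = 10**6 bytes)."""
    return channels * BYTES_PER_TRANSFER * data_rate_mts


SETUPS = {
    "1920X, quad channel, 3200": (4, 3200),
    "5900X, dual channel, 3200": (2, 3200),
    "5900X, dual channel, 3800": (2, 3800),
}

for label, (channels, rate) in SETUPS.items():
    print(f"{label}: {peak_bandwidth_mb_s(channels, rate)} MB/s")
```

Quad channel doubles the ceiling at the same data rate, while going from 3200 to 3800 on dual channel raises it by under 19%, so faster RAM narrows the gap but cannot close it.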

IPC_benchmark is a Linux inter-process communication benchmark. Here Intel is noticeably behind its counterpart, the 3500X. The 5900X is the clear winner, while Threadripper is the worst among the AMD CPUs.

Core-Latency measures the latency between all core combinations on the processor. Here Intel clearly wins, with Zen 3 showing some improvement over Zen 2 and TR4 far behind.

Pmbench is a benchmark aimed at profiling system paging and memory subsystem performance. This test profile reports the average page latency of the system. The monolithic Intel design wins, with Zen 3 very close behind. The older Zen 2 and TR4 CPUs, with higher latency, get much worse scores.

In the Botan cryptography benchmarks the 5900X wins with both the AES-256 and Blowfish ciphers. It's kind of odd that the 12-core 1920X achieves nearly the same score as the 6-core CPUs. Similarly, in the AOMedia AV1 encoder and the VP9/WebM conversion benchmarks the 1920X loses to all CPUs, while the 5900X wins by a margin that depends on the settings used.

In Kvazaar, a CPU-based H.265 video encoder, Threadripper regains some performance while Intel struggles. Maybe this benchmark likes larger CPU caches?

The 7-Zip compression benchmark looks more like what we would expect. It scales with core count, so the 12-core CPUs beat the 6-core ones, and the 5900X improves a lot over the older Threadripper.

Compilation benchmarks are tricky, as some projects like the Linux kernel scale really well with core count and cache size, ending up more a cache benchmark than a CPU performance metric. Here are two examples.

To end the Linux benchmarks overview, let's look at Blender rendering, which is an all-core load. The 12-core CPUs beat the 6-core ones, and Zen 3 is the clear winner.

Aside from some rough edges with the newer B550 motherboard, Zen 3 Linux performance is really good, with noticeable improvements over previous generations. Those who need Threadripper features will have to wait for the Zen 3 based versions, while for everyone else even the 5900X can handle various compute and development workloads easily.

The new CCD/CCX design does improve latency, although in extreme tests the monolithic Intel design still wins. This is unlikely to translate into noticeable performance differences in most workloads, but it may matter in some edge cases of complex compute workloads.

Conclusions

With Zen 2 going up to 16 cores, it was obvious that some HEDT/workstation tasks reserved for more expensive higher-end parts would move into the consumer lineup. For pure gaming the 3900X and 3950X weren't the best choice when considering the price, but if you streamed, made videos or used the PC for heavy-duty work, such a CPU was a cheaper alternative to Threadripper.

Over the three years of development between the 1920X and 5900X launches, the performance improvement is quite sizable. We get around 60% more performance and new features like PCIe 4.0, newer USB standards and even Thunderbolt 3 on some AM4 motherboards.

Gaming performance also got a nice boost. The design changes that decreased latency, along with the IPC and clock increases, all benefited this metric. There are still cases where Intel is better, but there should be no outliers where you lose a noticeable amount on either platform.

If you have tasks that can use 12 or 16 cores, go for the high-end 5900X and 5950X chips; if not, just save some money and go with the 5600X/5800X... but that all depends on what's in stock.
