Testing AMD Amuse - local image generation with Stable Diffusion AI models

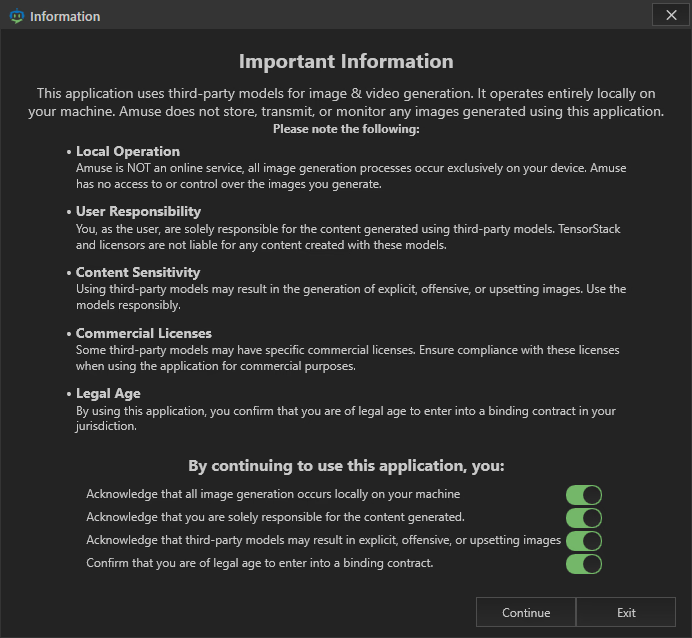

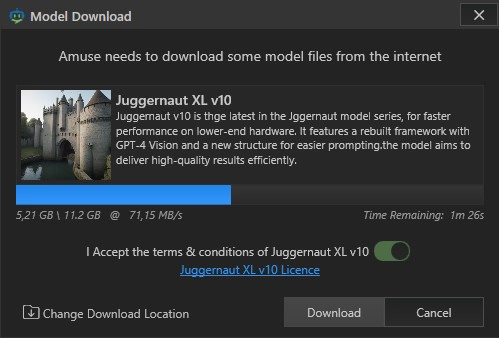

Amuse is a desktop application made by AMD and Tensorstack.ai that allows you to generate images locally using various Stable Diffusion AI models. It can be downloaded for free from amuse-ai.com.

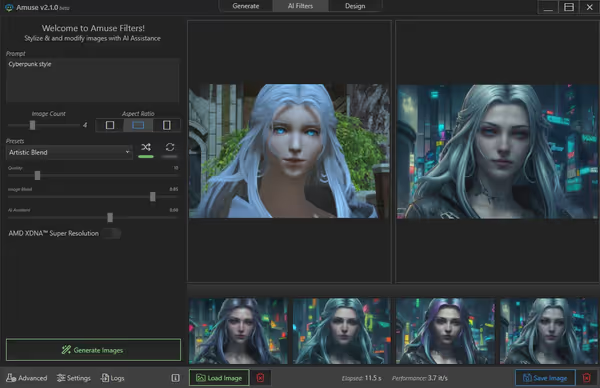

Amuse application

The application is a showcase of running AI on AMD hardware, but it can be used for simple image generation for various needs. It requires around 32GB of RAM and a video card with enough VRAM. The highest quality/most advanced Flux.1 model requires 24GB VRAM - Radeon 7900 XTX.

Amuse will independently download required models and generate images based on our prompt and settings. It works rather well, but I had to restart it a few times after some settings changes when it started generating random images ignoring the source image. On my Ryzen 9 5900X and Radeon 6950 XT system lower quality models generated images near instantly while higher-quality images took 15 seconds or a bit more per image.

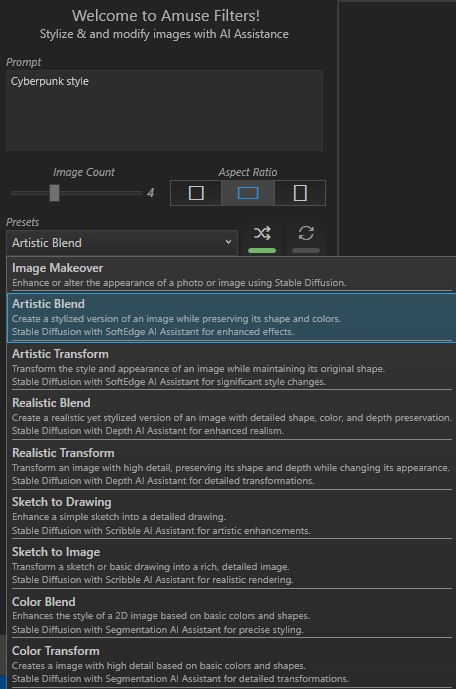

Image generation options

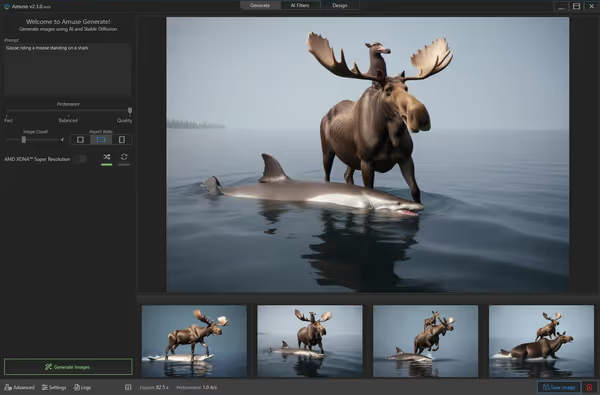

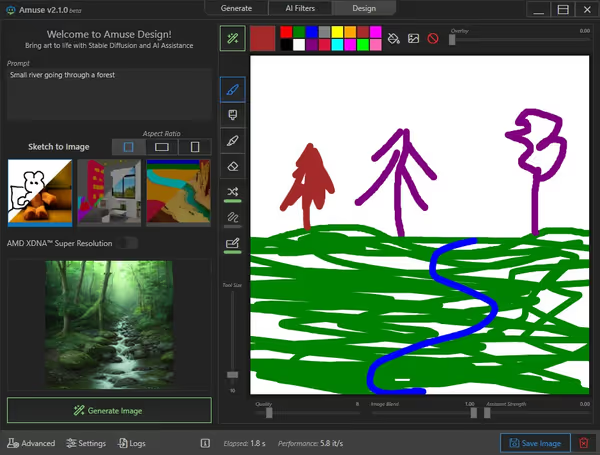

The app has 3 main ways to generate images. The first is simple prompt-based - you describe what you want and the model will try to generate that... The other is prompt + simple drawing and the third is generating an altered version based on the source image - changing style, colors, and more.

To convert an image you will have to select the aspect ratio and then use the prompt to describe the style you want the image to be generated in:

Drawing is a bit funky to get it to work but if you need something following a fixed layout then you may try it out:

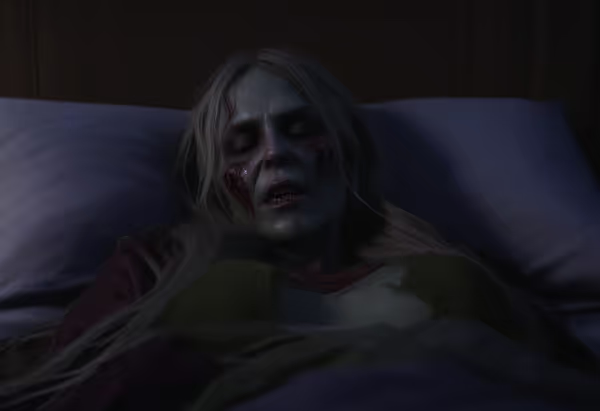

Models limitations

Most of the models are quite simple compared to those running on high-end AI servers. The images will be small while the model will often have problems with text and realism (body parts for example). At least AMD Amuse offers an option to upscale the images.

Lowest quality is quicker to generate but also the resulting image isn't very refined:

Those don't look like actual Murlocs but are still somewhat close on the creature type.

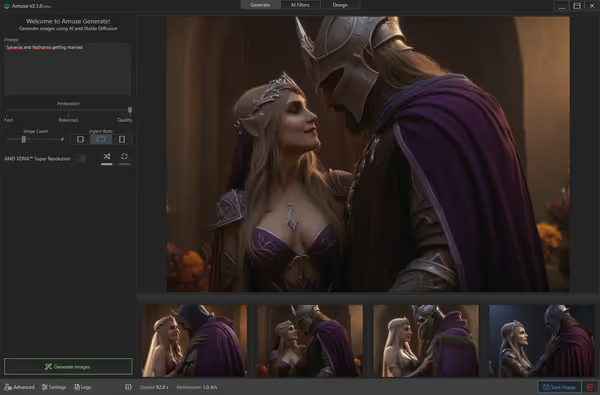

Game IP specific prompts

I used some World of Warcraft-specific prompts and the models can generate somewhat accurate (or at least interesting) images. This means the models were trained on some of the game artwork and know Sylvanas or Thrall to some extent.

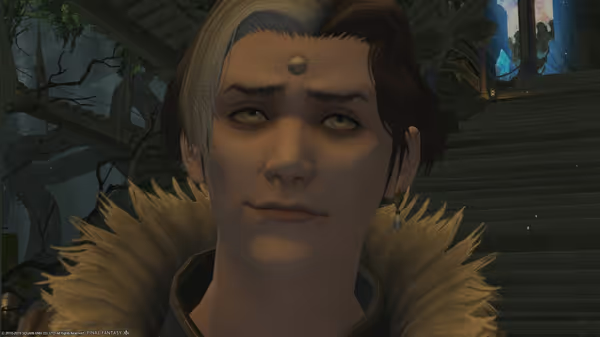

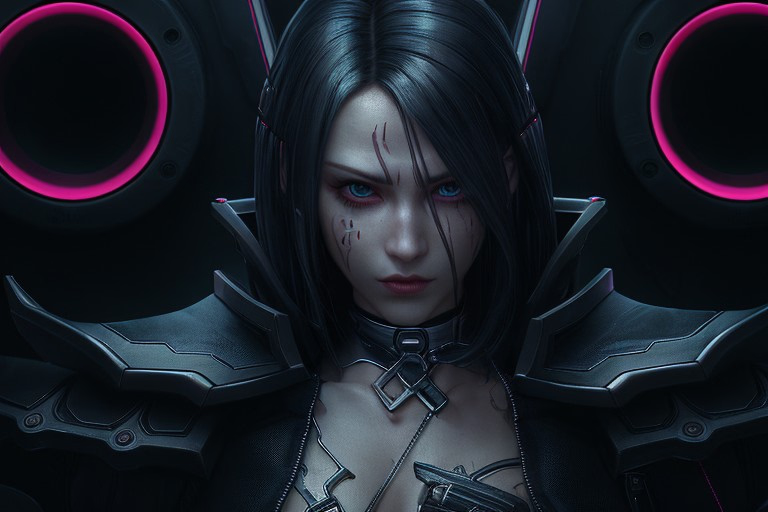

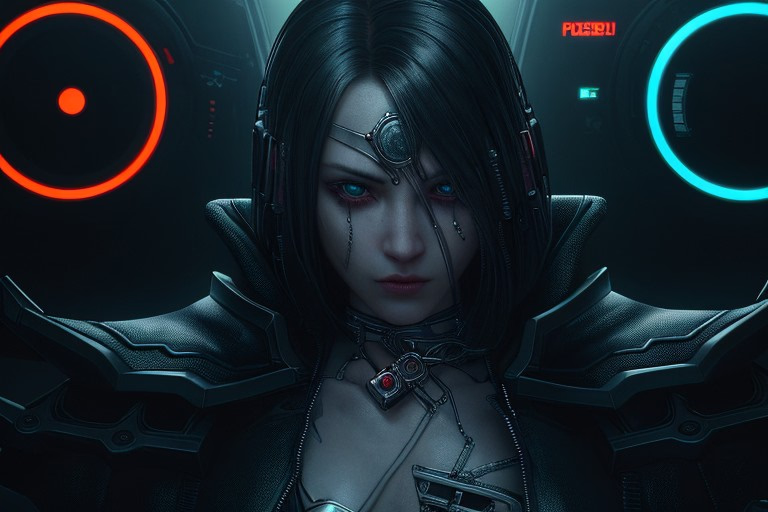

Cyberpunk 2077 is recognized while Final Fantasy XIV is not (same as Steve from GamersNexus

):

More direct prompts tend to work better although combining multiple objects can be a problem.

Image style alteration

The app offers multiple blend options for source images. You select the image and then use the prompt to describe the style to blend it.

FFXIV Emet-Selch blends

WoW Thrall blends

WoW Xal'atath blends

Comment article