Design and capabilities of multi-CPU computers

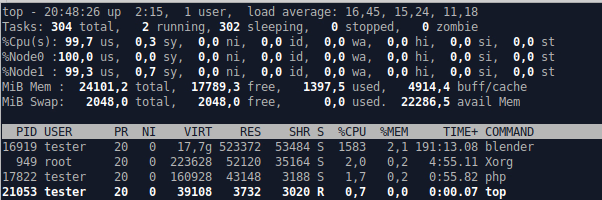

Tasks like video rendering can take many hours even on a good CPU. The total time depends on CPU performance: how many cores it has, what frequency it runs at, what its IPC (instructions per clock) is, and so on. Many tasks can use multiple cores to finish faster, but a single CPU can only have a finite number of cores due to size or design limits. To get around this, servers and workstations simply put more than one processor in one system. It adds complexity but provides many more cores for the task to run on. Let's check how multi-CPU systems work and how you can play with one yourself using a cheap old workstation motherboard.

In this article I'll go over some technical terms and principles behind multi-CPU systems and how work is executed on them. After that I'll show cheap old LGA 1366 workstation/server motherboards with two 4-core and 6-core Intel Xeon CPUs running Linux and Windows, along with general tips on getting one yourself. With the emergence of 6+ core CPUs from AMD and Intel, old dual-CPU workstations lost a lot of their value, but they can still be used to play with a dual-CPU system or as a cheap, nerdish PC for video processing and similar work.

Multi-CPU platforms

Support for running multiple CPUs was and is reserved for the top-of-the-line Intel Xeon and AMD Opteron (now Epyc) lineups. In 2011 AMD, while not having an efficient core design, offered systems with four 16-core CPUs for 64 cores in total. When games were running on quad-core Sandy Bridge and Ivy Bridge CPUs, Intel offered servers and workstations with two six-core Xeons at a very high premium.

A CPU can have only a limited number of cores: either the silicon die gets too big to manufacture at an acceptable price, or the design can't handle more cores (every core must communicate with the other cores, with RAM and so on). Multi-CPU systems were born to get around these limitations and provide more processing power. They add an extra layer of complexity but solve a lot of the performance problems single-CPU servers had.

When you have more than one CPU, they have to communicate with each other. Intel had QPI (QuickPath Interconnect) and now uses UPI (UltraPath Interconnect). AMD had HyperTransport in its Opterons and now uses Infinity Fabric, which it uses across its processors and not only for multi-CPU setups.

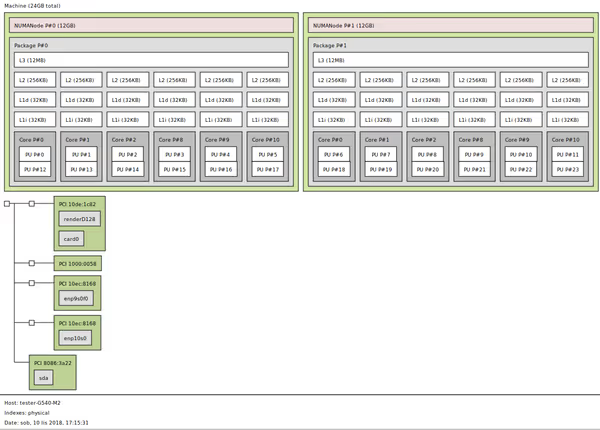

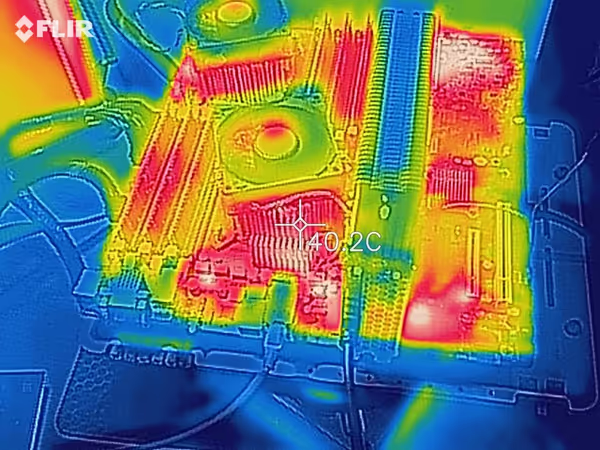

CPUs also need a very fast connection to RAM. Dual or even quad channel RAM in consumer systems shows how essential this RAM-CPU connection is. With multi-CPU configurations it becomes obvious that it's impractical to connect every CPU to one memory controller. And so the NUMA architecture (Non-Uniform Memory Access) was born. Each CPU has its own RAM and uses it as usual. Other CPUs can also access that RAM via the CPU-CPU link, at a performance penalty.

The CPU-CPU link and the NUMA architecture are the core pillars of multi-CPU systems. They allow workloads to scale across multiple cores of multiple CPUs. When you have 64 Opteron cores and something like 48 GB of RAM (12 * 4), the possibilities are quite broad. The operating system then fills in the last element of this layer of complexity: it manages which tasks are executed on which CPU. It would be bad to keep a task's data in the RAM of one CPU and execute the task on another. Each multi-CPU platform has its own quirks, and for serious workloads software developers and server admins put extra effort into optimizing this work distribution.

The Threadripper 2990WX with 32 cores makes it even trickier. Even though it's a single CPU, it's made of four chiplets, each housing part of the cores. This CPU works in a NUMA architecture: cores on two chiplets have direct RAM access, while cores on the other two chiplets do not, so there are some "better" and "worse" cores when it comes to memory access. Optimizing workloads for such a CPU can be even more challenging - NUMA architectures aren't easy.

Operating system and the NUMA architecture

If you have a multi-CPU PC you can install a server edition of Windows, Windows 10 Pro, or just a modern Linux distribution and take advantage of those CPUs. At first it will look like a normal PC, just with more cores. But when you dig into the technical details you will see how the work is handled, and you may even start trying to plan it better than the operating system can.

A CPU (or a group of cores) in a NUMA architecture is called a node, and you will see this term in many apps and system tools. You can check the load of a node, list the processes running on it, or tell the OS to run a given thread on a given node. Each node must be connected to the rest of the system with a cache-coherent interconnect bus (QPI, HyperTransport, Infinity Fabric).
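On Linux, one way to see which cores belong to which node is to read the per-node entries under `/sys/devices/system/node`. The snippet below is a minimal sketch that assumes this sysfs layout is present; the helper names `parse_cpulist` and `numa_nodes` are made up for this illustration and are not part of any library.

```python
from pathlib import Path

# Assumed location of the kernel's NUMA node entries on Linux.
NODE_ROOT = Path("/sys/devices/system/node")


def parse_cpulist(text):
    """Turn a kernel CPU list such as '0-3,8-11' into a sorted list of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # an empty list (node without CPUs) yields no entries
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def numa_nodes(root=NODE_ROOT):
    """Map node id -> list of CPU ids; empty dict if no node info is exposed."""
    nodes = {}
    if not root.is_dir():
        return nodes
    for node_dir in root.glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            nodes[int(node_dir.name[4:])] = parse_cpulist(cpulist.read_text())
    return nodes


if __name__ == "__main__":
    for node, cpus in sorted(numa_nodes().items()):
        print(f"node {node}: CPUs {cpus}")
```

On a single-CPU desktop this will usually report just one node; on a dual-socket board you would expect two.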

The OS will try to run a thread on the node where its memory was allocated (the optimal case), but if that's not possible it will execute it on a different node. Depending on the operating system, you will have libraries and tools to check or modify how this decision is made.
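As a rough illustration of overriding the scheduler's choice, Python on Linux exposes `os.sched_getaffinity` and `os.sched_setaffinity`. The sketch below is Linux-only and restricts the current process to a set of CPUs. Treating the first half of the allowed CPUs as "node 0" is purely a hypothetical stand-in for a real node's CPU list, and `pin_to_cpus` is a helper name invented here.

```python
import os


def pin_to_cpus(cpus, pid=0):
    """Restrict process `pid` (0 = the caller) to the given CPU ids.

    Returns the previous affinity set so the caller can restore it.
    """
    previous = os.sched_getaffinity(pid)
    os.sched_setaffinity(pid, set(cpus))
    return previous


if __name__ == "__main__" and hasattr(os, "sched_setaffinity"):
    allowed = sorted(os.sched_getaffinity(0))
    # Hypothetical: pretend the first half of the allowed CPUs is "node 0".
    node0_cpus = allowed[: max(1, len(allowed) // 2)]
    old = pin_to_cpus(node0_cpus)
    print("now restricted to", sorted(os.sched_getaffinity(0)))
    pin_to_cpus(old)  # restore the original affinity
```

Note that this only controls where the code runs. Where its memory is allocated is a separate policy, which is what NUMA-specific libraries and tools are for.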

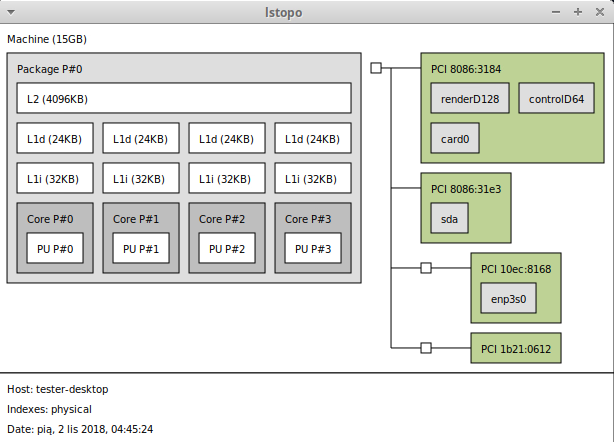

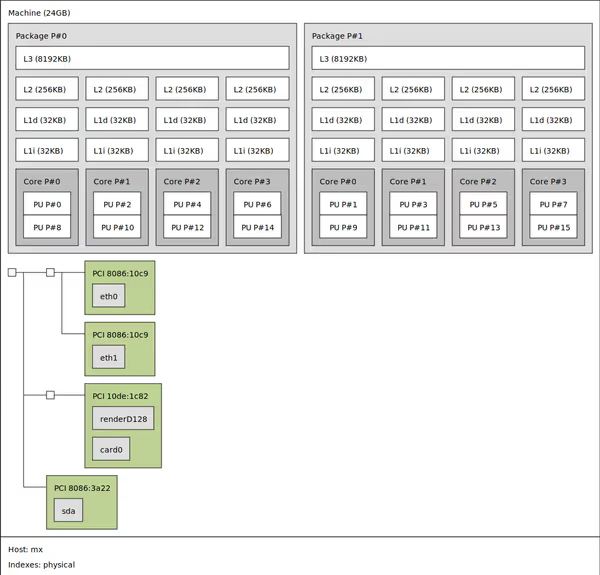

The collection of all nodes and other system components (like graphics cards) forms the system topology - a map of all nodes and of what else each node has access to.
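One small piece of that topology that Linux exposes directly is the relative "distance" between nodes, stored in a `distance` file in each node's sysfs directory. By convention a node's distance to itself is 10, and larger values mean slower access. The sketch below assumes that sysfs layout; the function names are illustrative only.

```python
from pathlib import Path


def parse_distance_row(text):
    """Parse one node's distance file, e.g. '10 21', into a list of ints."""
    return [int(value) for value in text.split()]


def numa_distances(root=Path("/sys/devices/system/node")):
    """Map node id -> list of distances to every node (index = target node)."""
    rows = {}
    if not root.is_dir():
        return rows
    for node_dir in root.glob("node[0-9]*"):
        distance = node_dir / "distance"
        if distance.is_file():
            rows[int(node_dir.name[4:])] = parse_distance_row(distance.read_text())
    return rows


if __name__ == "__main__":
    for node, row in sorted(numa_distances().items()):
        print(f"node {node} -> {row}")
```

A full picture that also includes caches and PCIe devices needs a dedicated topology tool; this only covers the node-to-node part.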

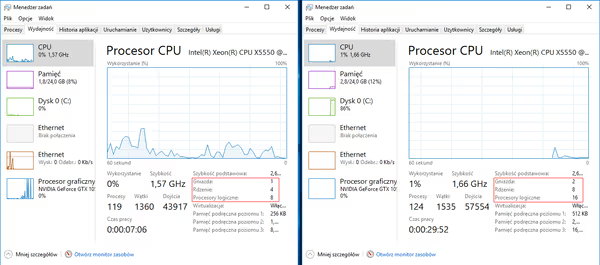

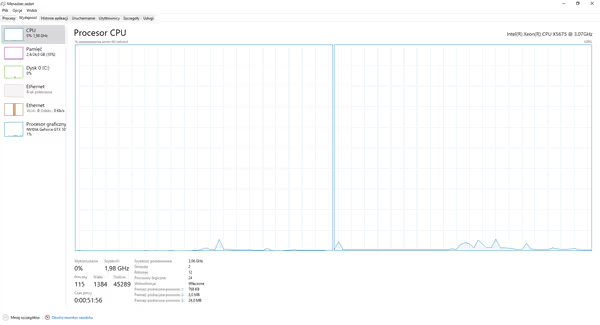

MS Windows

For Windows 10 you will need the Pro version for multi-CPU support, while Home will only show and use one CPU. Aside from that, there are the server versions of Windows.

A software developer or server admin tasked with deploying a given workload on a Windows-based system with multiple CPUs can use some of the Windows tools to control how tasks are spread across the CPUs and their cores.

Thread affinity forces a thread to run on a specific subset of processors. Setting thread affinity should generally be avoided, because it can interfere with the scheduler's ability to schedule threads effectively across processors. This can decrease the performance gains produced by parallel processing. An appropriate use of thread affinity is testing each processor.

When you specify a thread ideal processor, the scheduler runs the thread on the specified processor when possible. This does not guarantee that the ideal processor will be chosen but provides a useful hint to the scheduler.
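On Windows, affinity is expressed as a bitmask in which bit N stands for logical processor N. This is the form taken by Win32 calls such as `SetThreadAffinityMask`. The sketch below only shows how such a mask is built and decoded; it does not call the Win32 API. It assumes a single processor group of at most 64 logical processors, and the helper names are invented for this example.

```python
def affinity_mask(cpus):
    """Build a bitmask with bit N set for every logical processor N in `cpus`."""
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu < 64:
            # Assumption: one processor group, so indices 0..63 only.
            raise ValueError(f"processor index out of range: {cpu}")
        mask |= 1 << cpu
    return mask


def cpus_from_mask(mask):
    """Decode a bitmask back into a sorted list of processor indices."""
    return [bit for bit in range(64) if mask & (1 << bit)]


if __name__ == "__main__":
    # Hypothetical layout: logical processors 6-11 belong to the second CPU.
    second_cpu = affinity_mask(range(6, 12))
    print(hex(second_cpu), cpus_from_mask(second_cpu))
```

Which logical processor numbers belong to which physical CPU depends on the machine, so the 6-11 range above is only a placeholder.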

More on:

- docs.microsoft.com - multi-CPU systems

- docs.microsoft.com - NUMA support

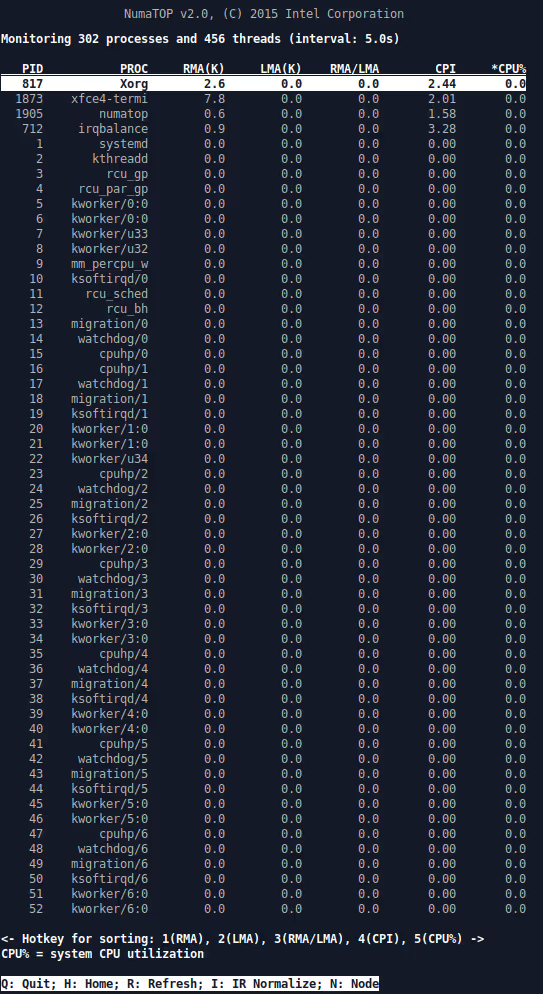

Linux

A lot of what has been said for Windows is also true for Linux. The API and tools are different but the idea stays the same. There is support in the Linux kernel, the `libnuma` library in userspace, and the `numactl` tool that can be used to manipulate thread affinity. `lstopo` is an application that draws a graph of the system topology - what is where and so on. Even the standard `top` command, which shows running processes and CPU utilisation, can be switched to NUMA node mode with the `2` key (`1` - each core, `2` - each NUMA node).
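To make the `numactl` part more concrete, here is a small sketch that builds a `numactl` command line binding a program's CPUs and memory to one node, using the tool's `--cpunodebind` and `--membind` options. It only constructs and prints the command. The program name `my_render_job` and its arguments are placeholders, and `numactl_command` is a helper name made up for this example.

```python
import shlex


def numactl_command(node, command):
    """Return an argv list that runs `command` with CPU and memory bound to `node`."""
    return [
        "numactl",
        f"--cpunodebind={node}",  # run only on the CPUs of this node
        f"--membind={node}",      # allocate memory only from this node
        *command,
    ]


if __name__ == "__main__":
    # Placeholder program and arguments, not a real tool.
    argv = numactl_command(0, ["my_render_job", "--input", "clip.mp4"])
    print(shlex.join(argv))
    # To actually launch it, pass argv to subprocess.run(argv, check=True).
```

Running `numactl --hardware` first shows which nodes exist and how much memory each one has.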

Multi-CPU systems for mortals, gamers and content creators

Modern multi-CPU systems are way too expensive for personal use, but older generations are too inefficient for premium server and workstation use, so they end up on eBay and similar sites where we can pick them up, usually for cheap. A pair of six-core LGA 1366 CPUs can go for under $100 in total, a motherboard for a similar amount, and we are good to go. Just add some old server ECC DDR3 RAM and the system is ready. For the more powerful and more modern LGA 2011 platform (and two eight-core CPUs) you will pay noticeably more ($500+ on Aliexpress for a motherboard, two CPUs and RAM combo).

Before the era of "only quad cores" from Intel was shattered, some YouTube content creators used such after-market workstations to process their videos. Even though the single-core performance of the old CPUs wasn't great, the sheer number of cores made them much better than what the best quad-core CPU could offer. Right now, with the emergence of 6-8 and maybe even 16-core consumer CPUs, the use for those old multi-CPU workstations is fading away.

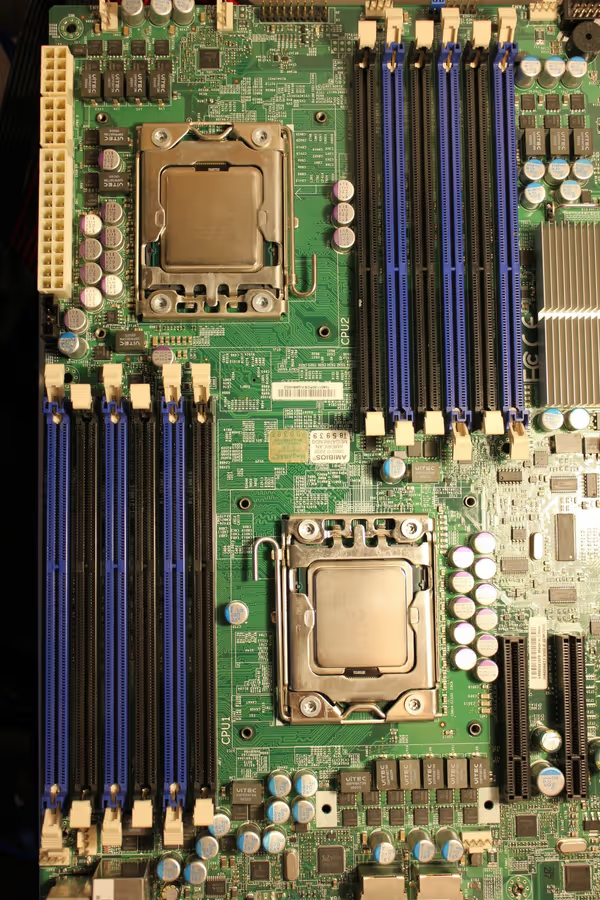

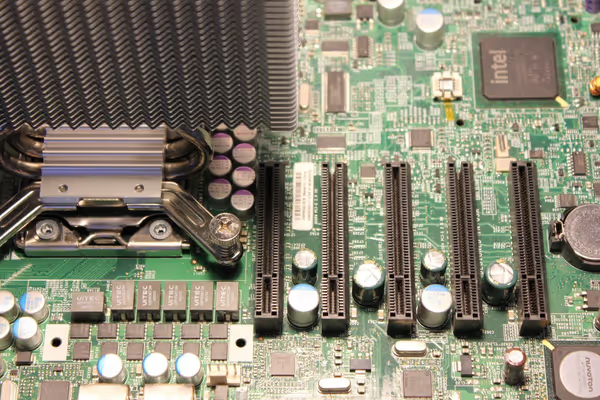

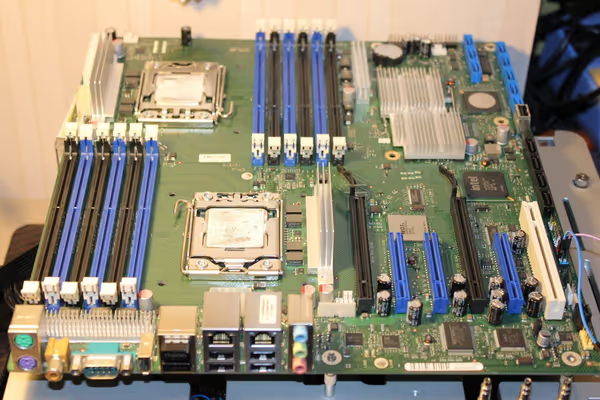

Below I'll showcase some old LGA 1366 dual Intel Xeon setups with quad-core and six-core Westmere CPUs on three motherboards from that era. When looking for one you may find different boards, and those may be fine too. What you have to look for is ATX power supply compatibility: custom server boards may not have ATX connectors, and if they do, check that they are standard ATX connectors. You will need two 8-pin CPU connectors in addition to the 24-pin ATX connector, and not every power supply has two 8-pin CPU connectors. When you find a nice looking board, google its documentation and check what CPUs and RAM it supports, as server/workstation hardware has very fixed and limited component support. In the end you will need the correct ECC RAM and a beefy power supply. The case will also have to handle a big ATX or eATX motherboard.

There are also cheap AMD Opteron processors, and you may be tempted to build a 4 x 16 core system. Just note that the G34 boards for those Opterons are rare and more expensive, and the Opterons run at low frequencies, so for things like gaming the performance will be very low. It will only scale (somewhat) for tasks that can use a large number of cores.

Performance and compatibility

6-core LGA 1366 5600-series Westmere CPUs are relatives of Sandy and Ivy Bridge. Right now those processor families aren't state of the art in terms of performance. System based on those CPUs won't be best for gaming, it will be ok

at best, and likely quite good

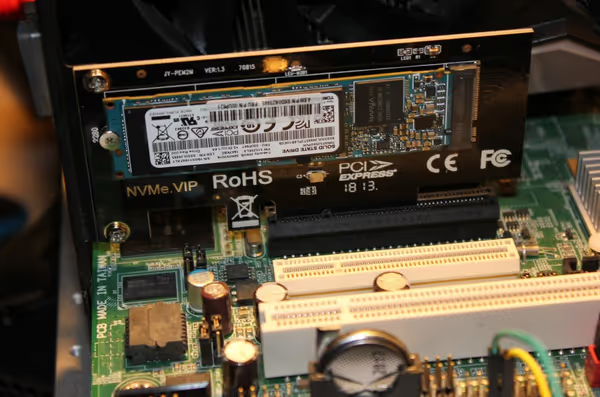

for multitasking. Also note that it won't have USB 3 support and SATA connectors are SATA II only so your SATA III SSD or HDD will work slower. You can add a PCIe SATA III or M.2. NVMe adapters but in case of NVMe - you won't be able to boot from (SATA drive needed).

Games don't scale with the NUMA architecture and may not even work properly. If you want some gaming, be sure to check what frequency the CPU runs at. Many Xeon models use low clocks and would be quite bad for desktop use. I would advise 3 GHz and up.

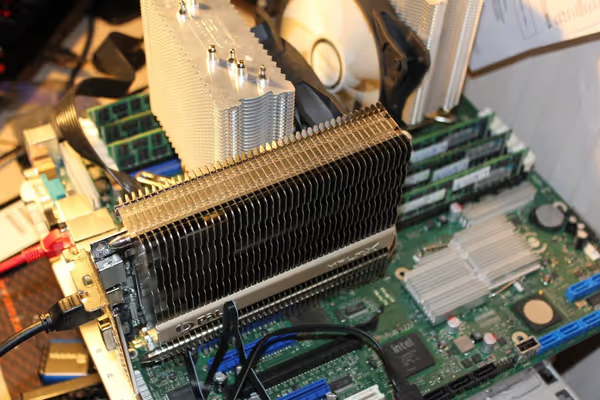

You can install nearly any modern 64-bit Linux distribution, and very likely (though not officially supported) Windows 10 Pro as well. You will also need a dedicated GPU, so be sure to pick a motherboard with PCIe slots. Also note that on some boards longer graphics cards may hit other components and will not fit directly (a riser cable would be needed). Shorter GPUs like the R9 Nano or Zotac GTX 1080 Ti Mini should be far more compatible.

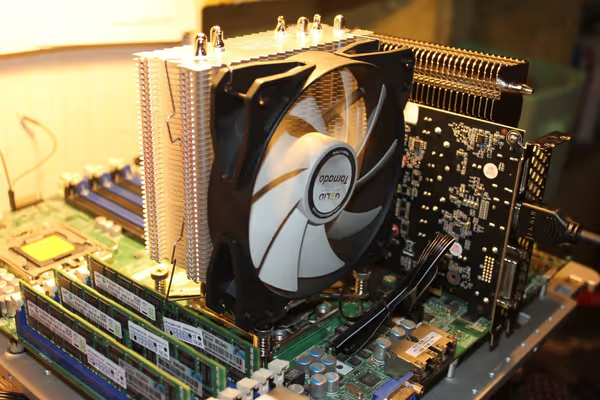

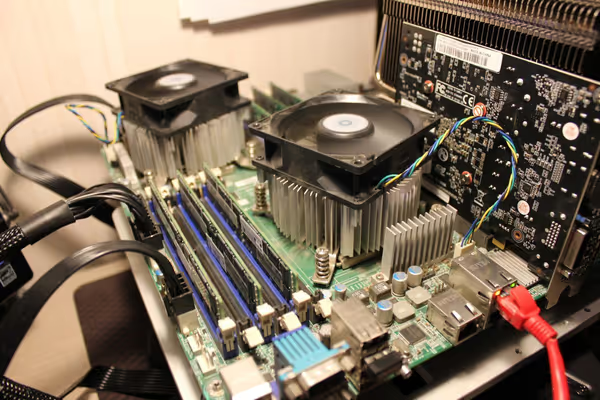

If you buy a full set you may get server-type cooling: either a heatsink with fans on each CPU, or big heatsinks on the CPUs cooled by fans in a rack case. Those server cooling solutions will be very loud, so I advise replacing them with consumer LGA 1366 coolers.

Gigabyte GA-7TESH2-RH

A Gigabyte motherboard from an Acer workstation with two quad-core X5550 CPUs. Officially it doesn't support the newer six-core 5600-series CPUs, but at least it worked flawlessly for me.

- 3DMark Time Spy 2 x X5550: 2 595 (2 408 GPU, 4 648 CPU)

- 3DMark PCMark 10 2 x X5550: 3 446

- Passmark 2 x X5550: 3 183

- CPU-Z 2 x X5550: 2722 (MT)

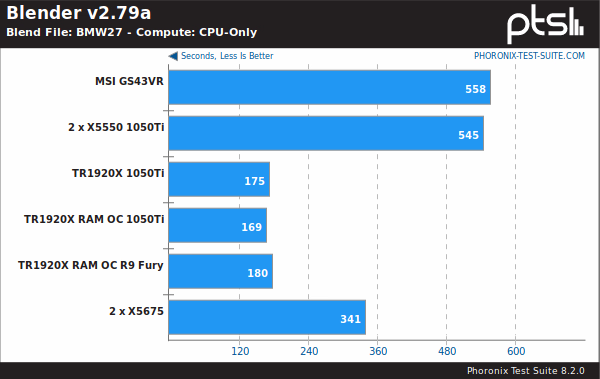

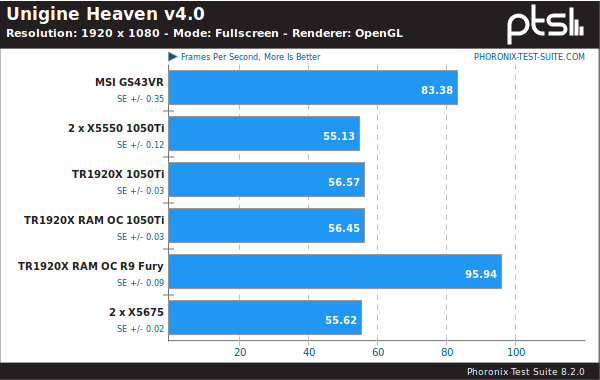

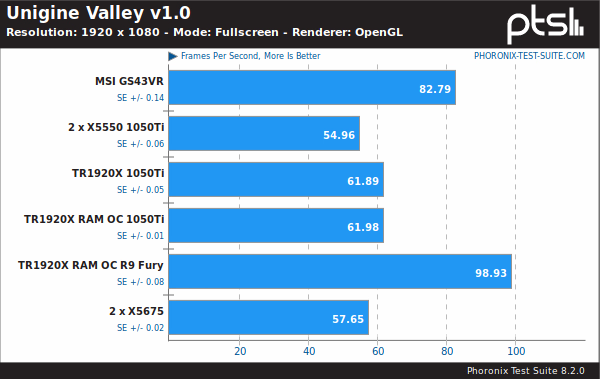

- Phoronix Linux benchmarks 2 x X5550 part 1

- Phoronix Linux benchmarks 2 x X5550 part 2

Supermicro X8DTE-F

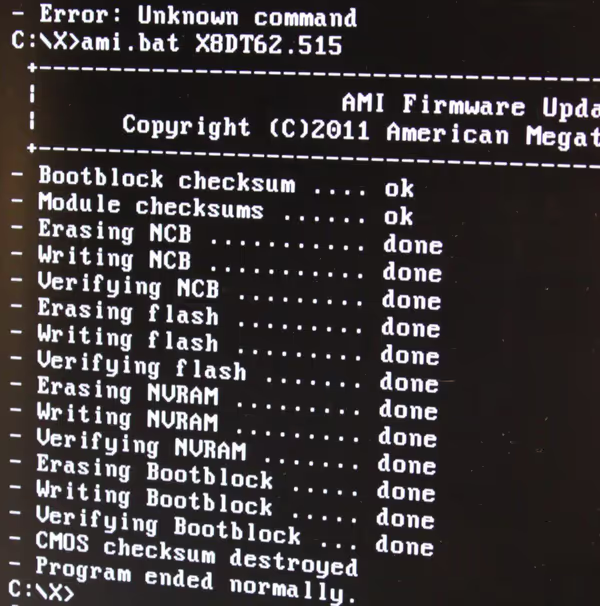

A server board that should support 5500 and 5600-series CPUs. The unit I had booted only with a 5500-series CPU and did not want to boot with six-core 5600-series CPUs in any configuration. Even a BIOS update (which can only be done from DOS/FreeDOS) did not help.

- Passmark 1 x L5520: 1 928

- 3DMark Time Spy 1 x L5520: 2 212 (2 387 GPU, 1 566 CPU)

- CPU-Z 1 x L5520: 825 (MT)

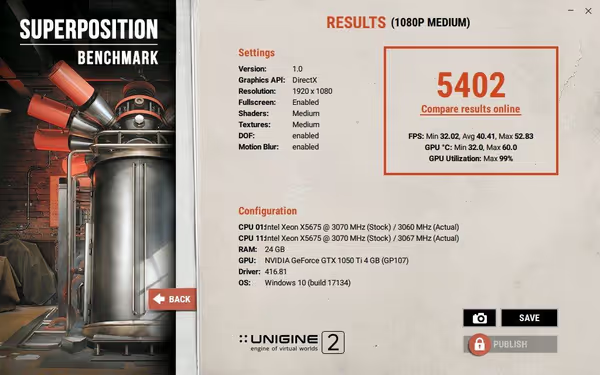

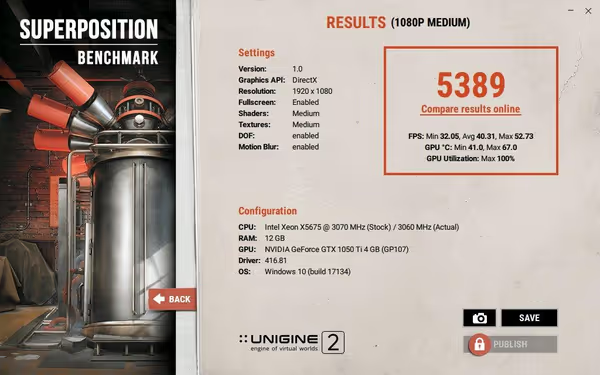

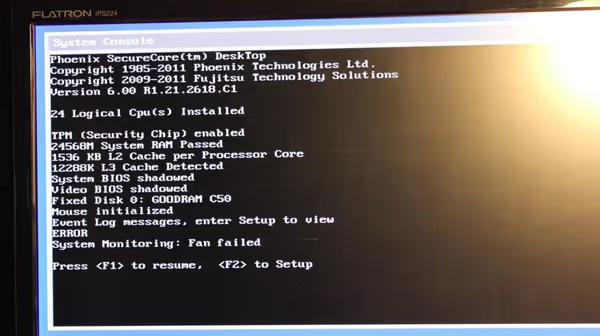

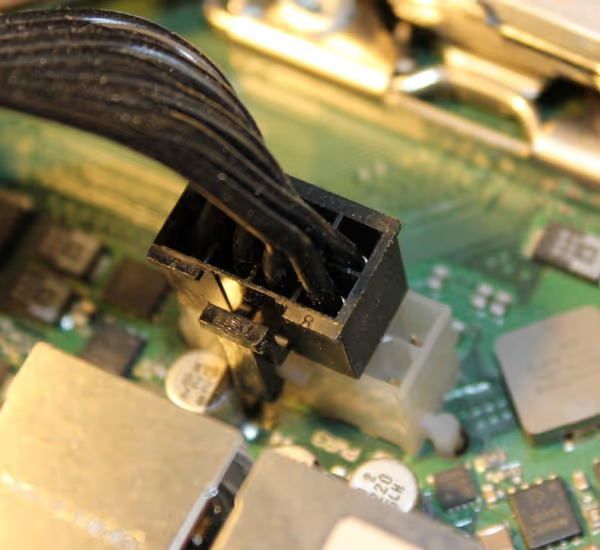

Fujitsu D2618-C14 (Celsius R670)

A workstation board. Even though it has a 10-pin CPU connector, it still works with a standard 8-pin plug connected. It handles two 6-core Westmere CPUs without problems. The boot process is long and it complains about missing fans.

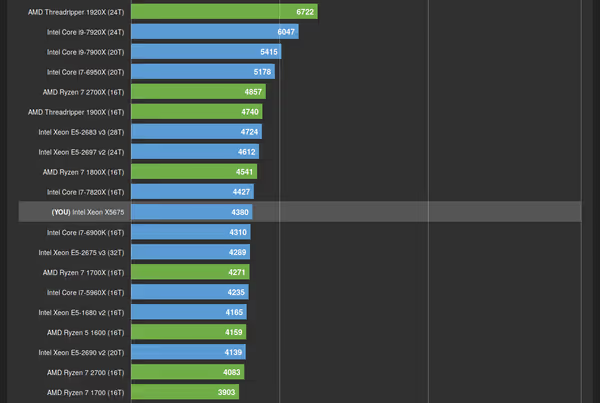

- CPU-Z 2 x X5675: 4380 (MT)

- 3DMark Time Spy 2 x X5675: 2 639 (2 390 GPU, 6 459 CPU)

- 3DMark Time Spy 1 x X5675: 2 524 (2 385 GPU, 3 774 CPU)
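The two Time Spy CPU scores above give a rough idea of how well the second CPU scales in that one test. The short calculation below uses only the numbers listed here (3 774 for one X5675, 6 459 for two) and should be read as a back-of-the-envelope estimate, not a general statement about NUMA scaling.

```python
# Time Spy CPU scores for the Fujitsu board, taken from the list above.
single_cpu_score = 3774  # 1 x X5675
dual_cpu_score = 6459    # 2 x X5675

speedup = dual_cpu_score / single_cpu_score
efficiency = speedup / 2  # 1.0 would mean perfect scaling with two CPUs

print(f"speedup: {speedup:.2f}x, scaling efficiency: {efficiency:.0%}")
```

So in this benchmark the second CPU adds roughly 71% more CPU score, while the overall Time Spy result barely moves because it is dominated by the GPU.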

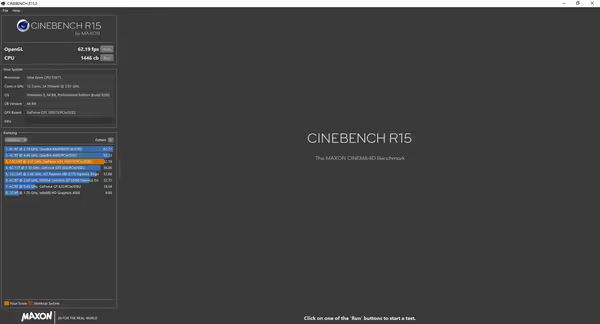

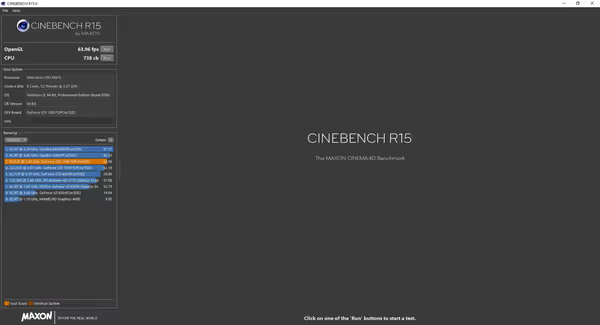

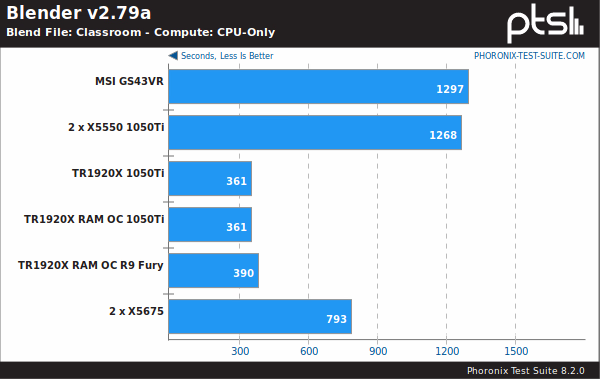

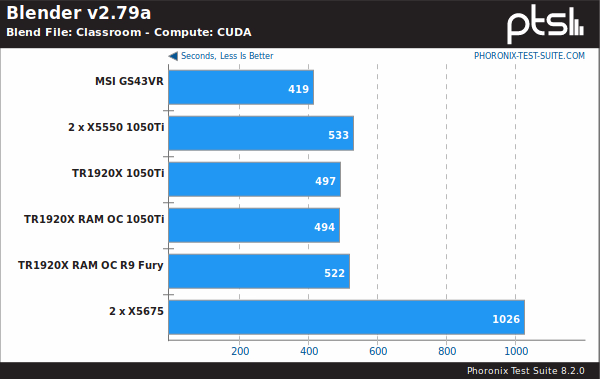

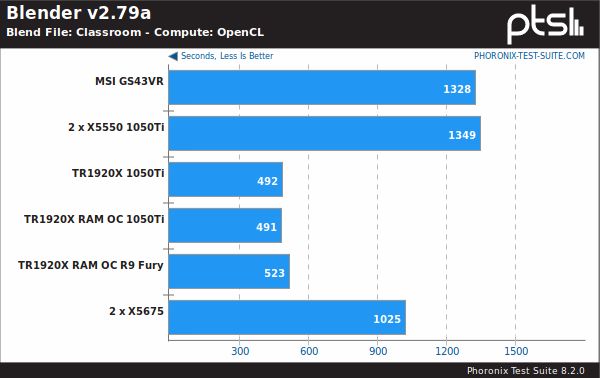

Performance comparison

Summary

A CPU from around 2011 won't be a cool piece of technology in 2019. There are some benchmarks where it still performs at an acceptable level, but LGA 1366 is slowly becoming irrelevant. At a very low price you can use it for NUMA architecture experiments or for some nerdish multi-core and multi-CPU workloads.

Games won't benefit from two CPUs, but you can try moving other tasks like recording or streaming to the second CPU and observe how that affects performance. Top-tier gaming won't be possible on LGA 1366 (maybe on LGA 2011), but some fun can still be had there.
