Designing a sentiment analysis application for TripAdvisor reviews in Python

Sentiment analysis allows us to quantify subjectivity and polarity of text - of a review, comment and alike. Subjectivity scores a phrase between fact and opinion while polarity scores negative to positive context. In this article I'll show you how to use it to score TripAdvisor reviews but also how to design and implement a simple application with clean code in mind.

Sentiment analysis and Python

Wikipedia can give you a good definition. I'll focus more on practical aspects of it. To do such analysis on English (and French, German with plugins) text we can use textblob package. It's as simple as:

from textblob import TextBlob

TextBlob("This is very good!").sentiment

TextBlob("This is very bad!").sentiment

TextBlob("This isn't that bad!").sentimentTextBlob sentiment

property returns a tuple containing polarity and subjectivity. Polarity is a number from [-1.0, 1.0] range where 1 is positive, -1 is negative. subjectivity is a number from [0.0, 1.0] where 0 is fact and 1 is opinion.

>>> TextBlob("This is very good!").sentiment

Sentiment(polarity=1.0, subjectivity=0.7800000000000001)

Polarity 1 so it was rated as very positive and with high subjectivity it was treated as a more of an opinion than a fact.

>>> TextBlob("This is very bad!").sentiment

Sentiment(polarity=-1.0, subjectivity=0.8666666666666667)

In this case we got -1 so very bad indeed.

>>> TextBlob("This isn't that bad!").sentiment

Sentiment(polarity=-0.8749999999999998, subjectivity=0.6666666666666666)

With no context TextBlob still rated it negatively. What actually isn't that bad

means? Better than expected? Worse than expected? Hard to tell and to get a more useful quantification we need sentences with enough context.

Review sentiment analysis

Reviews, comments, customer messages, social media posts on some topic are good target for sentiment analysis. It can allow us to determine if people like or dislike the topic and how emotional they are. The more advanced analysis the more valuable and precise data can be extracted to then help make the correct decisions that would help improve your business and alike.

For this example I've picked TripAdvisor reviews. People like to check reviews and ratings of hotels and other venues before they visit it. In a TripAdvisor review aside of text comment user gives a numerical rating but that rating may not tell the whole story. Having hundreds of reviews a hotel you could want to batch them, quickly find groups of customers that weren't happy and see what type of negativity they left in the review. This could be the job for sentiment analysis.

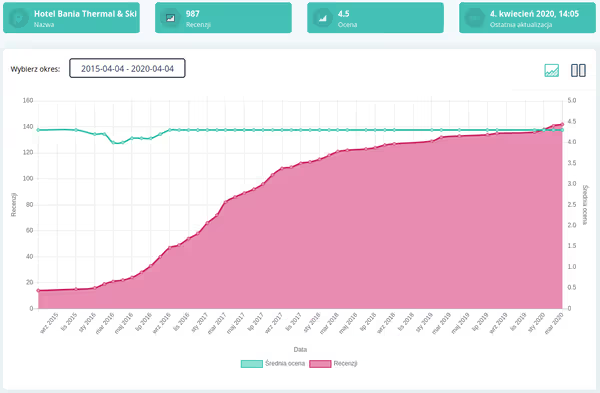

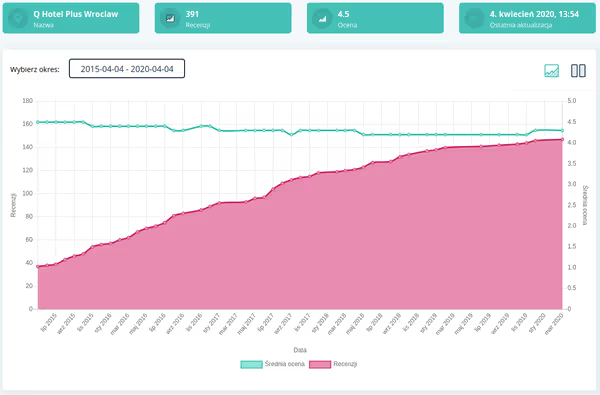

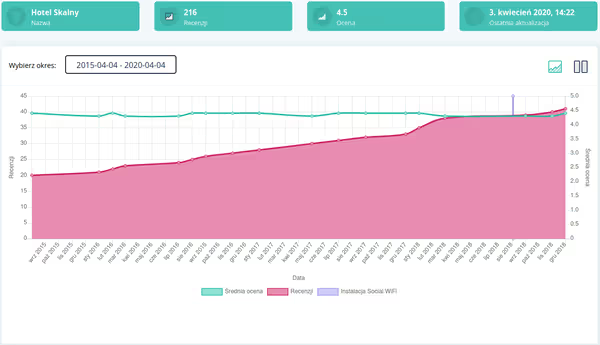

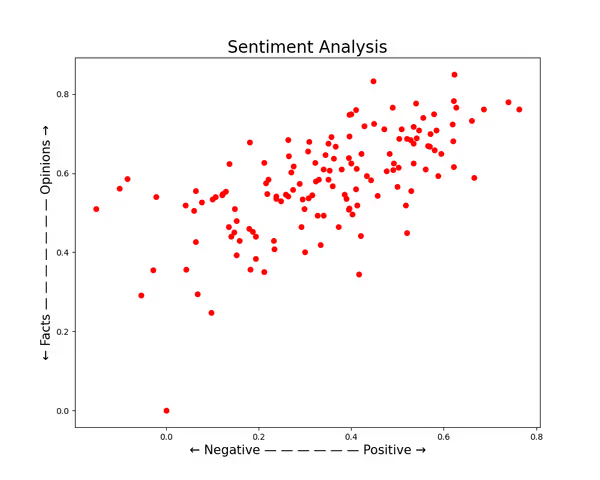

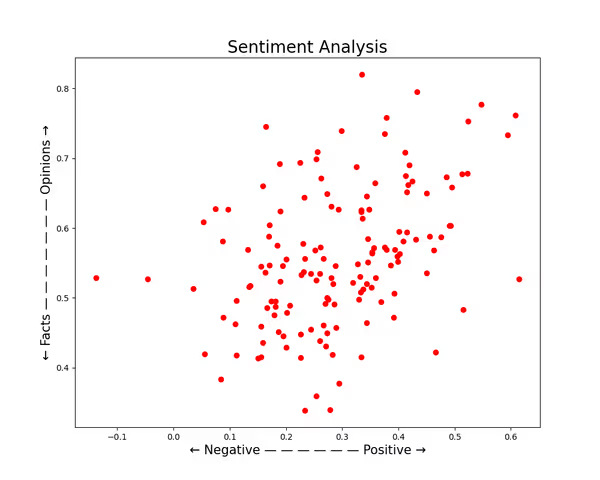

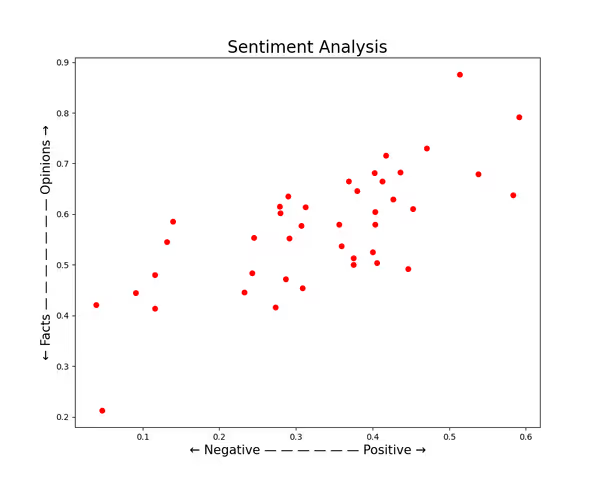

Let's look at 3 hotels from Poland. They are in different locations and function somewhat differently yet all of them have an average 4,5 score on TripAdvisor. When you look on the sentiment analysis you can notice that they do look different. We will go deeper into this later on as we implement our application. This is just to highlight how sentiment analysis can provide unique value from seemingly similar reviews.

|

|

|

|

|

|

TripAdvisor reviews sentiment analysis

The goal is to make an application that takes a JSON list of TripAdvisor reviews (fetched via third party app) and parses them to generate a chart or a simple table of reviews and their polarity and subjectivity scores.

As you could see getting the values is one line of code but to get from that to a small application that is functional, works correctly and can be extended upon, modified easily is a not so straightforward task. The code must be clean, easy to read and understand, covered by tests and must provide a good user experience on the frontend – result of such app will used by the end users - someone at marketing, customer service, not a developer.

The end result can be found on github.

Planning

- I have JSON files with TripAdvisor reviews from few hotels

- I have to parse those files and return a Python data structure containing data I need - like ID, text, review score

- Then I have to add subjectivity and polarity to each review on the list

- Having such data set I can display those scores in multiple ways

- I could make a scatter chart (point chart) with X/Y axis as polarity/subjectivity

- I could just make a HTML table that lists reviews and the scores

- Or as a future task - make an interactive point chart in JS showing review in a tooltip when hovered on a point on a chart

- Or create an Excel (sheet) file instead of HTML table...

So I have a list of things I have to do and things I would want to do. Each task can be a separate Python function, class, module or much more - depending on needs. If you plan your tasks it may be easier to then plan your code, track time and progress of your project. So let start.

I used Social WiFi external reviews system to fetch public TripAdvisor reviews and return them as JSON files for this project. This tool isn't public but you can generate data in same JSON format or change the code to handle your data structure. Social WiFi offers a Captive Portal solution for public WiFi networks and allows venue - customer interactions via own reviews, feedback forms, email automation and whatnot. Even as it's not a review site like TripAdvisor but a third part business you could already see how technologies like sentiment analysis could provide additional value to it - if properly used.

Input data

The data is a JSON file containing a list of TA reviews (with some data I don't need). The core structure looks like so:

{"data": [

{

"id":"some id",

"attributes":{

"text":"text here",

"rating": rating here

}

},

...

]}So the goal is to load this data via Python json

module and then return a Python data structure that is easy to work on.

Here is a simple class that will do that for us:

import json

class ReviewFactory:

def __init__(self, json_file):

self.json_file = json_file

def get_reviews(self):

json_data = self._get_raw_json()

for review in json_data['data']:

yield {'id': review['id'], 'text': review['attributes']['text'], 'rating': review['attributes']['rating']}

def _get_raw_json(self):

file = open(self.json_file)

return json.loads(file.read())Factory is a suffix often used for classes that produce some sort of data

. In this case we give it a json file and it returns reviews.

The main method is get_reviews that returns a generator - which for our usage is similar to a list. It could look like this:

def get_reviews(self):

json_data = self._get_raw_json()

data = []

for review in json_data['data']:

data.append({'id': review['id'], 'text': review['attributes']['text'], 'rating': review['attributes']['rating']})

return dataBut as you can see few extra lines of code can be avoided using yield

to return dictionaries as we go through the loop. Less cluttered code the better.

Then we have the _get_raw_json method which opens a file and returns parsed JSON. This class takes a path/filename of a JSON file.

The question may be why such class and not just few lines of code in one function or even without one? To have clean code. The JSON file contains more data than needed so we can clean it by returning only needed data in a defined structure instead of a dictionary from the JSON loop containing more data and potentially unexpected structure.

The _get_raw_json

method does one task and it's not related to getting reviews so it was moved to separate named method. Naming variables, methods, functions gives you an opportunity to make the code easy to understand, especially when you will return to it after some time or when you are working on code written by other developers.

With clean architecture also testing is easier. We can test smaller pieces of code which I'll show later on. But let's work a bit on that class as it can be made even better - even though it already works.

A dictionary is not the best data structure when we have fixed and mandatory data structure. When you get a dictionary as an output you don't really know what fields it must has, can have and alike. The code isn't providing this information so we would have to document it - or write code that would not need it.

Python in the collections module has a namedtuple which allows creating class data structures:

from collections import namedtuple

TripAdvisorReview = namedtuple('TripAdvisorReview', ['id', 'text', 'rating'])

a_review = TripAdvisorReview(1, 'tomato', 4)We used a namedtuple to define a TripAdvisorReview class with 3 required parameters - id, text and rating. Such class is a sort of a contract - we are stating what TripAdvisorReview is and what attributes it has.

Our get_reviews

method can now look like this:

def get_reviews(self):

json_data = self._get_raw_json()

for review in json_data['data']:

yield TripAdvisorReview(review['id'], review['attributes']['text'], review['attributes']['rating'])When you see such code you can navigate to the definition of TripAdvisorReview quickly in an IDE. That's quite handy and functions as a self-documenting code.

There is more that we can do. A class/namedtuple isn't the best data type to do operations on. We will have to add polarity and subjectivity and then visualize in different ways. For data analysis pandas can be used:

import json

from collections import namedtuple

import pandas

TripAdvisorReview = namedtuple('TripAdvisorReview', ['id', 'text', 'rating'])

class ReviewFactory:

def __init__(self, json_file):

self.json_file = json_file

def get_reviews(self):

raw_data = self._get_raw_data()

return pandas.DataFrame(raw_data, columns=['id', 'text', 'rating'])

def _get_raw_data(self):

json_data = self._get_raw_json()

for review in json_data['data']:

yield TripAdvisorReview(review['id'], review['attributes']['text'], review['attributes']['rating'])

def _get_raw_json(self):

file = open(self.json_file)

return json.loads(file.read())Here instead of returning the generator we pass it to pandas.DataFrame which is somewhat like an Excel sheet - you can do operations on columns, extend and convert the data, save it as an actual sheet, XML, JSON and whatnot.

So we have a class that returns the data from a JSON file. We can write some tests, for example:

from unittest import mock

from libraries import analysis

class TestReviewFactory:

def test_if_reviews_are_returned(self):

factory = analysis.ReviewFactory('tests/test_data.json')

result = factory.get_reviews()

expected = [

{

'id': '122566874',

'text': 'I liked the music and the view was relaxing.',

'rating': 4,

},

{

'id': '122566875',

'text': 'Rooms of high standard with good service and good staff. Excellent restaurant.',

'rating': 3,

},

]

assert result.to_dict(orient='records') == expected

@mock.patch('libraries.analysis.ReviewFactory._get_raw_json')

def test_if_reviews_are_built_from_json(self, raw_json):

raw_json.return_value = {"data": [

{

"type": "tripadvisor-reviews",

"id": "122566874",

"attributes": {

"reviewer-name": "Tomato",

"tripadvisor-id": 123,

"text": "I liked the music and the view was relaxing.",

"reviewed-at": "2012-01-01",

"rating": 4

}

},

]}

factory = analysis.ReviewFactory(None)

result = list(factory._get_raw_data())

expected = [

analysis.TripAdvisorReview("122566874", "I liked the music and the view was relaxing.", 4),

]

assert result == expectedThe test_if_reviews_are_returned is an integration test. We pass it a prepared test file and we check if the output is as we expect it (we know what's in the JSON file).

The test_if_reviews_are_built_from_json is more of an unit test. We are checking if _get_raw_data method returns one TripAdvisorReview. The _get_raw_json method have been mocked - we assigned what it should return for that test so the only thing actually executed is the code of the tested method.

The tests are run with pytest, which is configured in the repository.

Performing sentiment analysis

So now we have the initial data ready. Now we have to add two columns with polarity and subjectivity - calculated for every review text.

To do so I've wrote another class that does that:

class SentimentAnalyzer:

def build_data_set(self, json_file):

data = self._get_data_frame(json_file)

data['polarity'] = data['text'].apply(self._get_polarity)

data['subjectivity'] = data['text'].apply(self._get_subjectivity)

return data

@staticmethod

def _get_data_frame(json_file):

factory = ReviewFactory(json_file)

return factory.get_reviews()

@staticmethod

def _get_polarity(phrase):

return TextBlob(phrase).sentiment.polarity

@staticmethod

def _get_subjectivity(phrase):

return TextBlob(phrase).sentiment.subjectivityIn this implementation ReviewFactory is used by the class instead of it output being passed to it (can be implemented both ways) - this was my design decision.

The main method is build_data_set which takes the JSON file path and passes it to ReviewFactory that returns Pandas DataFrame. We then add two columns - polarity and subjectivity:

data['polarity'] = data['text'].apply(self._get_polarity) data['subjectivity'] = data['text'].apply(self._get_subjectivity)

The data['text'].apply will apply a given method/function to every element in the text column. The result will be assigned to a new column. Quite handy.

For this class we can write some good tests:

class TestSentimentAnalyzer:

def test_if_data_set_is_returned(self):

analyzer = analysis.SentimentAnalyzer()

result = analyzer.build_data_set('tests/test_data.json')

expected = [

{

'id': '122566874',

'text': 'I liked the music and the view was relaxing.',

'rating': 4,

'polarity': 0.6,

'subjectivity': 0.8,

},

{

'id': '122566875',

'text': 'Rooms of high standard with good service and good staff. Excellent restaurant.',

'rating': 3,

'polarity': 0.512,

'subjectivity': 0.548,

},

]

assert result.to_dict(orient='records') == expected

@mock.patch('libraries.analysis.ReviewFactory')

def test_if_data_frame_factory_is_called(self, frame_factory):

analyzer = analysis.SentimentAnalyzer()

analyzer._get_data_frame('path/to/file.json')

assert frame_factory.called

calls = frame_factory.call_args_list

assert calls[0].args[0] == 'path/to/file.json'

def test_if_polarity_is_high_for_positive_phrase(self):

analyzer = analysis.SentimentAnalyzer()

result = analyzer._get_polarity('This is very good')

assert round(result, 2) == 0.91

def test_if_polarity_is_low_for_negative_phrase(self):

analyzer = analysis.SentimentAnalyzer()

result = analyzer._get_polarity('This is very bad')

assert round(result, 2) == -0.91

def test_if_subjectivity_is_low_for_objective_phrase(self):

analyzer = analysis.SentimentAnalyzer()

result = analyzer._get_subjectivity('Tom is a professional singer. Table was well made and rock solid.')

assert round(result, 2) == 0.1

def test_if_subjectivity_is_high_for_subjective_phrase(self):

analyzer = analysis.SentimentAnalyzer()

result = analyzer._get_subjectivity('I liked the concert, the music was very pleasing, I like the food')

assert round(result, 2) == 0.55The test_if_data_set_is_returned is an integration test that goes through both classes. As we have fixed phrases in the test JSON file we can test for exact values that should be returned.

The test_if_data_frame_factory_is_called is a simple test that mocks ReviewFactory and checks if method _get_data_frame

will call it. Mocking prevents the original class from executing.

The last 4 test check _get_polarity and _get_subjectivity methods. We pass a string and check if the value is as expected. To some extent it's testing the external TextBlob library and you should avoid writing tests that only test the external library - it should have tests for that. In this case those tests ensure each method returns matching value - either subjectivity or polarity. Mismatch can happen so such tests help with that.

Visualization - chart

DataFrame from Pandas can be saved as Excel xlsx file and more. But we can do some of our own. First let's draw a scatter chart of all the reviews. To make a chart in Python, especially a scientific one a matplotlib library would often be used. Here is a class that can make a chart based on our DataFrame:

import matplotlib.pyplot as plot

class SentimentPlotter:

def __init__(self, data_set):

self.data_set = data_set

def draw(self, chart_name='sentiment.png'):

self._configure_chart()

self._fill_chart()

plot.savefig(chart_name)

plot.close()

@staticmethod

def _configure_chart():

plot.rcParams['figure.figsize'] = [10, 8]

plot.title('Sentiment Analysis', fontsize=20)

plot.xlabel('← Negative — — — — — — Positive →', fontsize=15)

plot.ylabel('← Facts — — — — — — — Opinions →', fontsize=15)

def _fill_chart(self):

for _, text in enumerate(self.data_set.index):

x = self.data_set.polarity.loc[text]

y = self.data_set.subjectivity.loc[text]

plot.scatter(x, y, color='Red')The class takes in the constructor the DataFrame returned by the SentimentAnalyzer. The main method - draw configures the chart (sets size, title and axis labels), then it fills it with data by iterating over the DataFrame and adding every entry to the chart by plot.scatter

. You can find more about it in matplotlib documentation. In the end it saves it as a PNG file given by name, sentiment.png

by default.

Charts presented at the start of the article are made by this class. You can see them also in github repository.

It's not that easy to test this class. We could make a chart from specific data and then check if the output file is as expected

but often you don't want to create artifacts (files) in tests as well as it's tricky/complex to do in a test. Let's do something simpler:

from unittest import mock

import pandas

from libraries import visualisation

class TestSentimentPlotter:

@mock.patch('matplotlib.pyplot.savefig')

def test_if_chart_is_drawn(self, savefig):

data = pandas.DataFrame([], columns=[])

plotter = visualisation.SentimentPlotter(data)

plotter.draw()

assert savefig.called

@mock.patch('matplotlib.pyplot.scatter')

def test_if_data_is_plotted(self, scatter):

review = {

'id': 123, 'text': 'zzz', 'rating': 5, 'polarity': 0.9, 'subjectivity': 0.2

}

data = pandas.DataFrame([review], columns=['id', 'text', 'rating', 'polarity', 'subjectivity'])

plotter = visualisation.SentimentPlotter(data)

plotter._fill_chart()

call = scatter.call_args_list[0]

assert call.args == (0.9, 0.2)In the test_if_chart_is_drawn test we mock the matplotlib savefig

method so the file isn't created but we test if it was called. It’s something between a unit test and a smoke test – run the code on a minimal setup/data and check if it won’t throw an exception.

In the test_if_data_is_plotted we mock the scatter

method and test if it got the correct values for correct axis - mismatch can happen and such tests will catch such errors quickly.

Visualization - HTML table

Pandas can save our data as xlsx file but we can play a bit with HTML and provide a table that would be good looking and more understandable by a non-data-scientist, non-developer type of a person - your customer, someone from marketing, customer management/care and alike.

The class is pretty long but it handles some HTML. If we would be using a web framework like Django or Flask all we would have to do is pass a DataFrame to the template context and loop it there with all the HTML and style processing instead of doing it in Python (Python generating HTML to then insert it in a HTML file isn't the cleanest thing - that's why template engines were written).

class SimpleSentimentTable:

template_file = 'assets/template.html'

template_block = '<!-- template -->'

def __init__(self, data_set):

self.data_set = data_set

def save(self, file_name='analysis.html'):

template = open(self.template_file).read()

rows = self._build_rows_html()

html = template.replace(self.template_block, rows)

self._save_file(file_name, html)

def _build_rows_html(self):

rows = self._get_rows()

return '\n'.join(rows)

def _get_rows(self):

data = self.data_set.to_dict(orient='records')

for row in data:

yield self.row_template.format(

tripadvisor_id=row['id'], text=row['text'], rating=row['rating'], subjectivity=round(row['subjectivity'], 2),

polarity=round(row['polarity'], 2))

)

@property

def row_template(self):

return ('<tr>'

'<td>{tripadvisor_id}</td>'

'<td>{text}</td>'

'<td>{rating}</td>'

'<td>{subjectivity}</td'

'><td>{polarity}</td>'

'</tr>')

@staticmethod

def _save_file(file_name, html):

new_file = open(file_name, 'w')

new_file.write(html)

new_file.close()So this class takes the DataFrame in the constructor, then the save method reads a HTML base file I provided and then replaces a HTML comment (value of template_block

property) with the generated HTML and saves under a new name (analysis.html by default). You can check the contents of assets/template.html in the github repo.

The _get_rows method makes all the magic happen. It iterates over the DataFrame and for each row it returns a HTML table row with values in each column (row_template property).

This class gets the job done but I want to add a usability effect to it, a wow factor

that will visually indicate how negative or positive given review is. Green is associated with good, while red with bad so we want to make rows with good reviews green and rows with bad reviews red.

Such task would be a job of a template engine filter/helper at best, but as we aren't using one (yet) I had to sacrifice a bit of good design and implement it in this class:

class SentimentTable:

template_file = 'assets/template.html'

template_block = '<!-- template -->'

def __init__(self, data_set):

self.data_set = data_set

def save(self, file_name='analysis.html'):

template = open(self.template_file).read()

rows = self._build_rows_html()

html = template.replace(self.template_block, rows)

self._save_file(file_name, html)

def _build_rows_html(self):

rows = self._get_rows()

return '\n'.join(rows)

def _get_rows(self):

data = self.data_set.to_dict(orient='records')

for row in data:

yield self.row_template.format(

tripadvisor_id=row['id'], text=row['text'], rating=row['rating'], subjectivity=round(row['subjectivity'], 2),

polarity=round(row['polarity'], 2), color=self._get_css_color(row['polarity'])

)

@property

def row_template(self):

return ('<tr style="background-color: rgba({color});">'

'<td>{tripadvisor_id}</td>'

'<td>{text}</td>'

'<td>{rating}</td>'

'<td>{subjectivity}</td'

'><td>{polarity}</td>'

'</tr>')

def _get_css_color(self, polarity):

ratio = str(self._get_polarity_ratio(polarity))

if polarity >= 0:

color = ('0', '255', '0', ratio)

else:

color = ('255', '0', '0', ratio)

return ', '.join(color)

@staticmethod

def _get_polarity_ratio(polarity):

return round(abs(polarity) / 1.0, 2)

@staticmethod

def _save_file(file_name, html):

new_file = open(file_name, 'w')

new_file.write(html)

new_file.close()_get_rows also calls _get_css_color method (which could be it own class, function as it's different, distinct functionality). If the polarity is 0 or more it will be green, if below - red. Negative comments will be red, positive will be green. But there is more. The color is in RGBA format - first three values determine the color (RGB) while the forth is opacity - 0 is transparent, 1 is opaque. The _get_polarity_ratio method returns that value. The less positive a review is the less green it will appear (more and more transparent green on a white background). Similar the less negative it is the less red it will appear. A cool visual effect that quickly shows polarity value in a visual way.

So all that is left is testing. First we can test if the data HTML was passed to the result file:

class TestSentimentTable:

@mock.patch('libraries.visualisation.SentimentTable._save_file')

def test_if_file_is_saved(self, save_file):

review = {

'id': 123, 'text': 'zzz', 'rating': 5, 'polarity': 0.9, 'subjectivity': 0.2

}

data = pandas.DataFrame([review], columns=['id', 'text', 'rating', 'polarity', 'subjectivity'])

printer = visualisation.SentimentTable(data)

printer.save()

call = save_file.call_args_list[0]

assert call.args[0] == 'analysis.html'

row = ('<tr style="background-color: rgba(0, 255, 0, 0.9);">'

'<td>123</td><td>zzz</td><td>5</td><td>0.2</td><td>0.9</td>'

'</tr>')

assert row in call.args[1]We mock the _save_file so a file isn't created but we can test values of arguments passed to it - the html

argument is the full HTML of the page to be saved so we can check if the data rows are there.

The polarity color values are a perfect candidate for unit tests:

def test_if_returns_polarity_for_bad(self):

printer = visualisation.SentimentTable(None)

result = printer._get_polarity_ratio(-1)

assert result == 1.0

def test_if_returns_polarity_for_half_bad(self):

printer = visualisation.SentimentTable(None)

result = printer._get_polarity_ratio(-0.5)

assert result == 0.5

def test_if_returns_polarity_for_half_good(self):

printer = visualisation.SentimentTable(None)

result = printer._get_polarity_ratio(0.5)

assert result == 0.5

def test_if_returns_polarity_for_good(self):

printer = visualisation.SentimentTable(None)

result = printer._get_polarity_ratio(1)

assert result == 1In few tests we covered almost the whole range of cases for this function. Can you guess what special value isn't tested? Yes, it's zero. In cases where something is greater or lower than zero you have to be aware of the zero itself and handle it. I used greater or equal

conditional so it will be in the green color branch, but as it's zero it will appear as white anyway (fully transparent green).

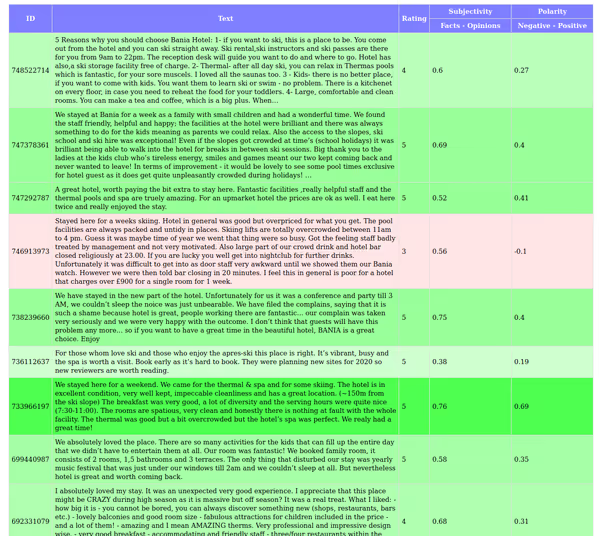

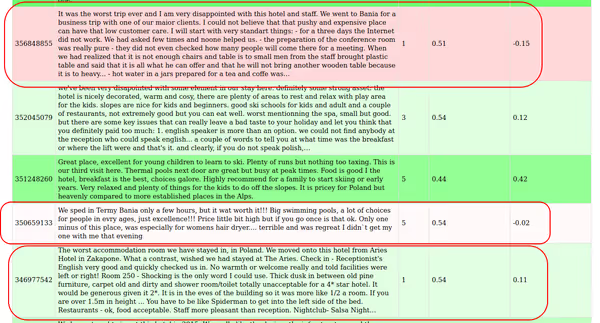

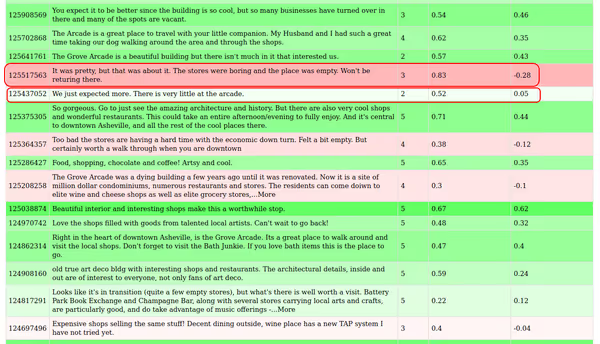

Below you can find some of the results. Full tables are in the repository.

The first marked review is negative and the user left a 1-star review. The last one is also 1-star but the polarity is big higher. Even though a customer wasn't happy he used less negative language in his review. What's interesting is the middle market review. It's a 5-star review yet it has a low polarity - customer was happy, but found things about which he wasn't - such things could be a value feedback for the venue while it could be lost among positive reviews (with 4,5 average you look closely on the negative ones while positive review may get lost among other positive ones).

Going forward

In this article I wanted to showcase sentiment analysis but moreover how to write an application that produces an interesting and valuable result while maintaining clean and tested codebase. All of this code could be shortened to one blob of file but it would be really bad to test, modify or maintain and it would likely end up as copy this code to get a chart from this data

. This example is like 4 classes but in a more feature complete application you would have hundreds if not thousands lines of code that you can't manually test and it has to work, especially with data processing it has to report correct values in correct places. Clean and tested codebase is the key.

Also do note that this code isn’t perfect. There is some refactoring that could be done, especially with the HTML table visualization. Developer always is learning and always can improve.

You can download the whole repository, run the tests, try using your own data or creating a different visualization method for existing data and more.

TripAdvisor reviews can be fetched by some online services/APIs (but for full feature set they will be paid). Similarly Twitter, Facebook, YouTube have their APIs that could give you access to some data that could be analyzed this way.

If you have any questions feel free to ask. You can also check Dhilips article that inspired me to write this article. It uses sentiment analysis on Twitter posts.

Comment article