Managing a machine vision project in PYNQ

It's time for more PYNQ tips and tricks. This time I'll showcase a bit of software distribution, testing and a fun OpenCV face detection app.

Distributing notebooks

If you have created a notebook and want to share it and make it easy to install, there are a few steps you have to follow.

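First of all, create a repository; GitHub is the most recommended option here. The goal is to put the notebook in the repository and make it installable with a single command, typically pip pointed at the repository URL (something like `pip3 install git+https://github.com/riklaunim/pynq-example-notebook.git` for the example repository used in this article).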

Installation is handled by a setup.py file that contains the install instructions. I made a very simple repository that installs a simple notebook - pynq-example-notebook. You can clone or copy it and modify it for your needs.

If you check the setup.py it looks like so:

    import os
    import shutil
    import sys

    from setuptools import setup, find_packages

    package_name = 'example-pynq-notebook'  # set your name
    notebook_source_folder = 'notebook/'
    board_notebooks_dir = os.environ['PYNQ_JUPYTER_NOTEBOOKS']

    setup(
        name=package_name,
        version='0.1',  # your version here
        description='Just an example notebook',  # set the description
        author='PYNQ Hero',  # and author
        url='https://github.com/riklaunim/pynq-example-notebook',  # and URL to the repository/home page
        include_package_data=True,
        packages=find_packages(),
        install_requires=[
            "pynq"
        ],
    )


    def install_notebook(notebook_name):
        notebook_path = os.path.join(board_notebooks_dir, notebook_name)
        if os.path.isdir(notebook_path):
            shutil.rmtree(notebook_path)
        shutil.copytree(notebook_source_folder, notebook_path)


    if 'install' in sys.argv:
        install_notebook(package_name)

We want our notebook to show up in the notebooks list, and to do that we have to copy it explicitly. This is handled by the install_notebook function, which is called during installation. Thanks to the PYNQ_JUPYTER_NOTEBOOKS environment variable we know where the notebooks are stored, so we can create a new folder there and copy the files into it.
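The copy step can also be written so that it does not depend on module-level variables, which makes it easy to try out away from the board. The sketch below is a variation on install_notebook, not the code from the repository: the function name copy_notebook_folder and its target_root parameter are assumptions made for illustration, and temporary folders stand in for the repository and for the directory named by PYNQ_JUPYTER_NOTEBOOKS.

```python
import os
import shutil
import tempfile


def copy_notebook_folder(source_folder, target_root, notebook_name):
    """Copy source_folder to target_root/notebook_name, replacing any old copy."""
    notebook_path = os.path.join(target_root, notebook_name)
    if os.path.isdir(notebook_path):
        # Remove a previous installation so stale files do not linger.
        shutil.rmtree(notebook_path)
    shutil.copytree(source_folder, notebook_path)
    return notebook_path


# Demonstration with temporary folders (hypothetical layout).
with tempfile.TemporaryDirectory() as workspace:
    source = os.path.join(workspace, "notebook")
    target_root = os.path.join(workspace, "jupyter_notebooks")
    os.makedirs(source)
    os.makedirs(target_root)
    with open(os.path.join(source, "example.ipynb"), "w") as handle:
        handle.write("{}")

    installed = copy_notebook_folder(source, target_root, "example-pynq-notebook")
    installed_files = sorted(os.listdir(installed))
    print(installed_files)
```

Passing the target directory as an argument also makes it straightforward to give a clear error message when PYNQ_JUPYTER_NOTEBOOKS is not set, instead of failing with a KeyError at import time.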

The setup function can also be used to define Python requirements. If you need some system packages as well, you can call apt via an os.system call.
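As a rough sketch of that idea, the snippet below uses subprocess.run rather than os.system, so that a failed install raises an error instead of being silently ignored. The helper names and the package name are hypothetical, and a real run assumes the installer has root privileges.

```python
import subprocess


def build_apt_command(packages):
    """Return the apt-get argument list that installs the given packages."""
    if not packages:
        raise ValueError("at least one package name is required")
    # -y answers the confirmation prompt so the install can run unattended.
    return ["apt-get", "install", "-y", *packages]


def install_system_packages(packages, dry_run=False):
    """Install packages with apt-get; with dry_run=True only return the command."""
    command = build_apt_command(packages)
    if not dry_run:
        # check=True raises CalledProcessError when apt-get exits with an error.
        subprocess.run(command, check=True)
    return command


# Dry run: nothing is installed, the command is only printed.
# "libopencv-dev" is just a placeholder package name.
print(install_system_packages(["libopencv-dev"], dry_run=True))
```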

More advanced projects may need to compile code or pick different files depending on the target board. This can be done as well. You can check the setup.py files of other projects, like Xilinx/PYNQ-HelloWorld, for examples of how to do these things.
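One way to differentiate by board is to read the BOARD environment variable, which PYNQ images normally set as far as I know. The following is a minimal sketch under that assumption; the folder layout, the mapping and the function name are hypothetical and not taken from any of the mentioned repositories.

```python
import os

# Hypothetical mapping from board name to the folder holding its notebooks.
BOARD_NOTEBOOK_FOLDERS = {
    "Pynq-Z1": "boards/Pynq-Z1/notebooks",
    "Pynq-Z2": "boards/Pynq-Z2/notebooks",
}


def select_notebook_folder(environ=os.environ, default="notebook/"):
    """Pick the notebook source folder for the board named in BOARD."""
    board = environ.get("BOARD")
    # Fall back to the generic folder when BOARD is missing or unknown.
    return BOARD_NOTEBOOK_FOLDERS.get(board, default)


print(select_notebook_folder({"BOARD": "Pynq-Z2"}))
print(select_notebook_folder({}))
```

The returned path could then be used in place of a fixed notebook source folder in setup.py.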

Testing within notebooks

When writing code it's good to have some tests ready to quickly validate a given piece of logic. For Jupyter notebooks we can use the ipytest package, which brings pytest tests to notebooks.

To get it running we have to install ipytest. On the default PYNQ-Z2 board I noticed that some dependencies were too old and not compatible, so you have to update them. At the time of writing this article these versions were working for me:

Then import it in the desired notebook:

    import pytest
    import ipytest

    ipytest.autoconfig()

Then you can create a block with test functions and make it execute like so:

    ipytest.clean_tests()


    def test_if_it_works():
        assert 2 == 2


    ipytest.run('-qq')

Now let's look at an example function I wrote to get a bigger face box than the one the detection returned:

    def expand_face_coordinates_if_possible(face, image, expand_by=60):
        image_width, image_height = image.size
        x, y, width, height = face

        # Move the top-left corner outwards, but not past the image edge.
        x = x - expand_by
        if x < 0:
            x = 0
        y = y - expand_by
        if y < 0:
            y = 0

        # Grow the box on both sides, clipping at the right and bottom edges.
        width = width + expand_by * 2
        if width + x > image_width:
            width = image_width - x
        height = height + expand_by * 2
        if height + y > image_height:
            height = image_height - y
        return x, y, width, height

This function takes a face detection (x, y, width, height) and the source image object (Pillow), and returns the face coordinates expanded by the given number of pixels. Testing it live on a captured frame would take too much time to cover all cases, and it would be even more problematic if the function were part of a more complex flow. So let's write some tests:

    import PIL.Image

    ipytest.clean_tests()


    def test_if_coordinates_are_expanded():
        image = PIL.Image.new('RGB', (400, 400))
        face = (100, 100, 50, 50)
        result = expand_face_coordinates_if_possible(face, image, expand_by=20)
        assert result == (80, 80, 90, 90)


    def test_if_left_side_edge_is_handled():
        image = PIL.Image.new('RGB', (400, 400))
        face = (0, 0, 50, 50)
        result = expand_face_coordinates_if_possible(face, image, expand_by=20)
        assert result == (0, 0, 90, 90)


    def test_if_right_side_edge_is_handled():
        image = PIL.Image.new('RGB', (400, 400))
        face = (350, 350, 50, 50)
        result = expand_face_coordinates_if_possible(face, image, expand_by=20)
        assert result == (330, 330, 70, 70)


    ipytest.run('-qq')

Tests can also be used to showcase how a part of the code works using provided test data instead of, say, a frame captured from HDMI, which may not be available when someone is browsing the notebook, or a camera frame that happens to contain no faces. Here is an example:

This is a function that crops the face from the image, draws a deep pink overlay and adds text written in Comic Sans:

    def draw_meme(image, face):
        image = crop_face(image, face)
        # Pink border around the cropped face.
        image = PIL.ImageOps.expand(image, border=20, fill='deeppink')
        w, h = image.size
        # Canvas with 60 extra pixels at the bottom for the caption.
        overlay = PIL.Image.new('RGB', (w, h + 60), (255, 20, 147))
        overlay.paste(image, (0, 0))
        # Use a smaller font for narrow faces.
        font_size = 30
        if w < 200:
            font_size = 20
        draw = PIL.ImageDraw.Draw(overlay)
        font = PIL.ImageFont.truetype("/home/xilinx/jupyter_notebooks/ethan/data/COMIC.TTF", font_size)
        draw.text((20, h), "PYNQ Hero!", (255, 255, 255), font=font)
        return overlay

In the normal code flow it would need an image, such as a frame from a camera, with face detection run on it and so on. But a notebook can ship with additional files that can be used to test or showcase such smaller pieces:

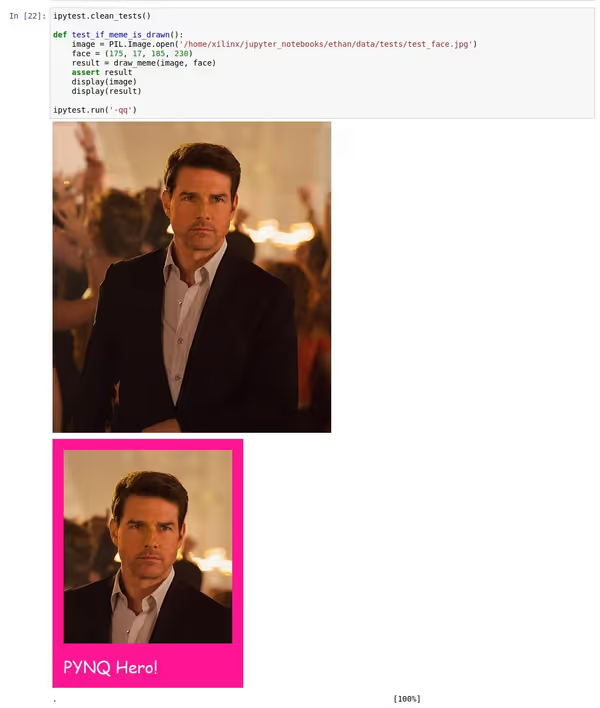

    ipytest.clean_tests()


    def test_if_meme_is_drawn():
        image = PIL.Image.open('/home/xilinx/jupyter_notebooks/ethan/data/tests/test_face.jpg')
        face = (175, 17, 185, 230)
        result = draw_meme(image, face)
        assert result
        display(image)
        display(result)


    ipytest.run('-qq')

Here we manually provide the image and the coordinates of the face. We also use IPython.display.display to force the images to be displayed.

And with a test image in place we can do a quick test against the external libraries we use, like face detection:

    def detect_faces_on_frame(frame):
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        return face_cascade.detectMultiScale(gray, 1.3, 5)


    ipytest.clean_tests()


    def test_if_face_is_detected():
        image = PIL.Image.open('/home/xilinx/jupyter_notebooks/ethan/data/tests/test_face.jpg')
        frame = npframe.array(image)
        faces = detect_faces_on_frame(frame)
        assert len(faces) == 1
        x, y, w, h = faces[0]
        assert [x, y, w, h] == [213, 94, 119, 119]


    ipytest.run('-qq')

Machine vision project - meme generator

You should know where this is going... we are going to make a meme generator as a machine vision project. Our PYNQ-Z2 board will read frames from HDMI-IN, detect faces and, if any are present, draw memes, put them on a single frame and display that on HDMI-OUT. The full notebook is available at riklaunim/pynq-meme-generator.

We start with the general setup:

    from time import sleep

    from pynq.overlays.base import BaseOverlay
    from pynq.lib.video import *

    base = BaseOverlay("base.bit")

    hdmi_in = base.video.hdmi_in
    hdmi_out = base.video.hdmi_out
    hdmi_in.configure(PIXEL_RGB)
    hdmi_out.configure(hdmi_in.mode, PIXEL_RGB)
    hdmi_in.start()
    hdmi_out.start()

And then the logic:

    run = 0
    memes_displaying = False

    while run < 10:
        print(run)
        frame = hdmi_in.readframe()
        image = get_image_from_frame(frame)
        faces = detect_faces_on_frame(frame)

        created_memes = []
        for face in faces:
            meme = draw_meme(image, face)
            created_memes.append(meme)

        if created_memes:
            print('Memes detected', len(created_memes))
            output_image = display_memes_on_one_frame(created_memes)
            output_frame = npframe.array(output_image)
            outframe = hdmi_out.newframe()
            outframe[:] = output_frame
            hdmi_out.writeframe(outframe)
            memes_displaying = True
        else:
            if not memes_displaying:
                print('Displaying source')
                hdmi_out.writeframe(frame)

        sleep(1)
        run += 1

We loop over 10 frames. For each frame we try to detect faces, and if any are found we make memes out of them (crop, draw the outline and the text) and place all memes on a 1080p image that is then sent to the output. If no faces are detected and no memes have been shown yet, we just display the original frame, so it's easier to see what's going on and why no faces were found.
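display_memes_on_one_frame is not shown in this article, so the following is only a guess at the kind of layout logic such a function needs, not the notebook's actual code. It computes where each meme would be pasted on a 1920x1080 canvas, filling rows from left to right and marking memes that no longer fit. The function name, the margin and the wrapping strategy are all assumptions.

```python
def layout_memes(sizes, canvas_size=(1920, 1080), margin=10):
    """Return (x, y) paste positions for memes of the given (width, height) sizes.

    Memes are placed left to right; a new row starts when the current one is
    full. Memes that do not fit on the canvas get None as their position.
    """
    canvas_width, canvas_height = canvas_size
    positions = []
    x = y = margin
    row_height = 0
    for width, height in sizes:
        if x + width > canvas_width and x > margin:
            # Current row is full: move to the start of the next row.
            x = margin
            y += row_height + margin
            row_height = 0
        if x + width > canvas_width or y + height > canvas_height:
            # Too wide for the canvas or no vertical space left.
            positions.append(None)
            continue
        positions.append((x, y))
        x += width + margin
        row_height = max(row_height, height)
    return positions


# Three memes of 900x500 pixels: two fit in the first row, the third wraps.
positions = layout_memes([(900, 500), (900, 500), (900, 500)])
print(positions)
```

Each non-empty position could then be passed to the paste method of a blank 1920x1080 Pillow image, in the same way draw_meme pastes the cropped face onto its overlay.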

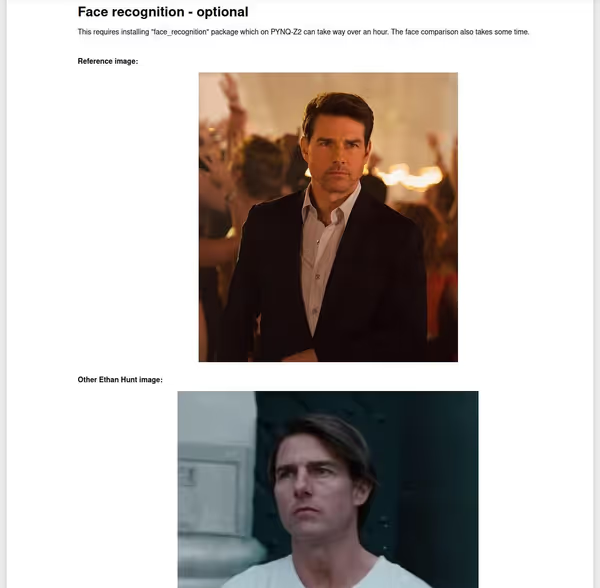

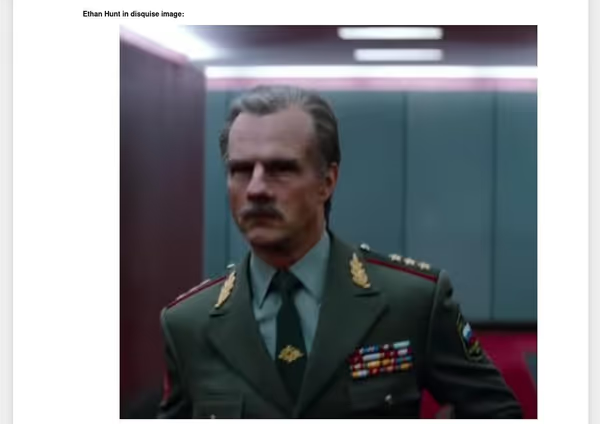

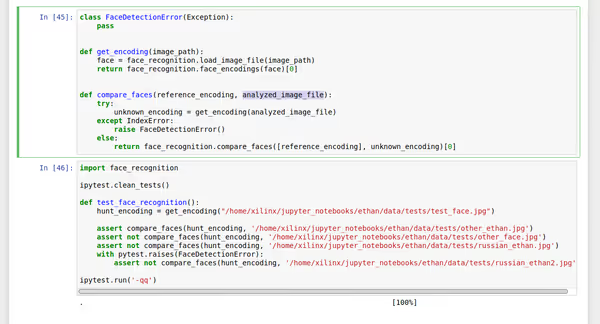

Face comparison and recognition

There are Python libraries like face-recognition that can compare and recognize faces. It can run on the PYNQ-Z2, although installation will take more than an hour and the process itself won't be too fast either, as it's not accelerated. If you wanted to do it quickly, then either the detected and cropped faces would have to be sent to a server capable of doing this computation, or you could try a USB-based NPU to get some edge acceleration for a similar implementation.

face-recognition can be used like so:

    import face_recognition

    known_image = face_recognition.load_image_file("reference_image.jpg")
    known_encoding = face_recognition.face_encodings(known_image)[0]

    unknown_image = face_recognition.load_image_file("unknown_image.jpg")
    unknown_encoding = face_recognition.face_encodings(unknown_image)[0]

    results = face_recognition.compare_faces([known_encoding], unknown_encoding)

The result is a one-element list with a True/False value depending on whether the faces are assumed to belong to the same person. If a face can't be detected, no encoding will be returned.
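Since no encoding is returned for an image without a detectable face, indexing the result with [0] fails with an IndexError in that case, so it is worth guarding the lookup. The sketch below shows such a guard and, for illustration, the kind of check compare_faces performs: to my understanding it compares the Euclidean distance between encodings against a tolerance that defaults to 0.6. Plain Python lists stand in for real encodings (which are much longer) so the example runs without the library, and the helper names are hypothetical.

```python
import math


def first_encoding(encodings):
    """Return the first face encoding, or None when no face was detected."""
    return encodings[0] if len(encodings) > 0 else None


def same_person(known_encoding, unknown_encoding, tolerance=0.6):
    """Return True/False for a match, or None when either face is missing."""
    if known_encoding is None or unknown_encoding is None:
        return None
    # A smaller distance means more similar faces.
    return math.dist(known_encoding, unknown_encoding) <= tolerance


# Short made-up vectors stand in for the encodings face_recognition returns.
known = first_encoding([[0.10, 0.20, 0.30]])
similar = first_encoding([[0.15, 0.25, 0.30]])
missing = first_encoding([])  # what an image without a detectable face yields

print(same_person(known, similar))
print(same_person(known, missing))
```

Returning None for the missing-face case keeps it distinct from a genuine mismatch, which matters if the calling code wants to retry with another frame.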

You can get the full notebook from the riklaunim/pynq-meme-generator GitHub repository.
